What Is Large Language Model (LLM) Security?

Large language model (LLM) security refers to measures and methods employed to safeguard AI systems that generate human-like text. As these models grow, they become integral to various applications. Consequently, they also present potential vulnerabilities that attackers might exploit. Security frameworks aim to mitigate threats to data privacy, ethical standards, and potential misuse by enforcing strict protocols and monitoring systems.

Implementing LLM security involves integrating offensive and defensive strategies during the model’s development lifecycle. This includes careful management of the training data, deploying secure infrastructure, and regularly updating systems to defend against emerging threats. It is crucial to adopt a proactive approach that anticipates security challenges rather than reacting to them after-the-fact. Protecting LLMs is vital for maintaining user trust and ensuring the safe integration of these models into different contexts.

This is part of a series of articles about AI security.

In this article

Why LLM Security Matters: Impact of LLM Security Concerns

Data Breaches

Data breaches in LLMs arise when unauthorized entities access sensitive information used or generated by these systems. Given that LLMs are often trained on vast datasets, they may inadvertently store or reveal confidential information, leading to privacy violations. Entities that deploy LLMs must establish stringent access controls, encrypt data, and monitor system activities to detect potential breaches promptly.

Furthermore, organizations should be aware of the implications of data breaches specific to LLMs, such as leaked data or misuse of sensitive outputs. Establishment of policies for regularly updating datasets and protocols can mitigate risks and improve data security. Vigilance in monitoring potential breach vectors and acting swiftly upon detection is also vital to protect sensitive information effectively.

Model Exploitation

Model exploitation occurs when adversaries manipulate LLMs to produce harmful outputs or engage in unauthorized activities. Attackers can exploit vulnerabilities within the model, such as manipulating inputs to cause the model to output misleading or harmful information. Safeguarding against these exploitations requires diverse strategies like model validation, adversarial testing, and continuous monitoring of outputs for anomalies.

Enforcing a security framework that includes ethical guidelines and technical safeguards is essential. Regular audits, threat simulations, and adoption of secure development practices can reduce the likelihood of model exploitation.

Misinformation

Misinformation through LLMs is a significant risk because these models can inadvertently create and spread false narratives. The high-quality, persuasive text generated by LLMs can mislead users, amplify false information, and contribute to social harm. Developers of LLMs must prioritize accuracy and contextual understanding, ensuring that models are trained to discern and reduce the spread of misinformation.

To curb misinformation, implement monitoring systems that validate the accuracy of outputs against trusted sources. Building feedback loops where user interactions help in refining the model can also enhance its reliability. Transparency in model function and output explanations can foster user awareness and trust, reducing the potential impact of misinformation.

Ethical and Legal Risks

LLMs introduce ethical and legal risks by potentially infringing on privacy, perpetuating biases, or generating inappropriate content. The ethical deployment of LLMs mandates strict adherence to legal standards, user consent, and data protection regulations. Developers must be aware of the model’s potential to reinforce stereotypes or yield harmful outputs when misconfigured or improperly monitored.

Mitigating these risks requires training of LLMs with diverse datasets to enhance fairness and minimize biases. Regular ethical audits and consultations with legal experts ensure compliance with evolving standards. Users and developers should engage with continuous education on ethical AI practices to align model deployment with societal expectations and legal frameworks.

Use of LLMs in Social Engineering and Phishing

Large language models (LLMs) are increasingly being exploited in social engineering and phishing attacks due to their ability to generate highly convincing and personalized content at scale. Attackers can use LLMs to automate the creation of phishing emails, making it easier to launch targeted campaigns that are more difficult to detect and prevent.

For instance, LLMs can be used to generate business email compromise (BEC) attacks, where the model impersonates high-ranking executives and requests sensitive information or financial transactions. These emails often include subtle variations in phrasing and content, making them harder for traditional security filters to detect. The sophistication of LLMs allows them to mimic the tone and style of legitimate communications, increasing the likelihood that the target will fall for the scam.

To defend against these LLM-driven social engineering and phishing attacks, organizations must employ advanced threat detection systems that can identify patterns typical of LLM-generated content. Techniques like text embedding and clustering can help differentiate between legitimate and malicious emails by analyzing the semantic content of the text. Additionally, incorporating multi-phase detection models that evaluate the likelihood of AI-generated content and cross-reference it with sender reputation and authentication protocols can significantly reduce the success rate of such attacks.

Related content: Read our guide to anti-phishing.

Tal ZamirCTO, Perception Point

Tal Zamir is a 20-year software industry leader with a track record of solving urgent business challenges by reimagining how technology works.

TIPS FROM THE EXPERTS

- Carry out “red teaming” exercises: This real-world stress testing can reveal unknown vulnerabilities, especially when models interact with user-generated content.

- Implement robust logging with anomaly detection for prompt injection: For prompt injection detection, log all input-output pairs and utilize anomaly detection models that can flag unusual output patterns indicative of injection attempts. This allows for early detection of subtle prompt injection tactics that could bypass standard filters.

- Segment and audit permissions within LLM applications: Treat LLM-driven applications as “zero-trust” systems internally. Segment permissions between components and limit privileges. Audit these permissions regularly, ensuring that no component has unintended access to sensitive data or functions.

- Utilize role-based access for LLM interactions: Control which users or systems can access specific LLM functionalities based on role-based access controls (RBAC). For instance, limit high-risk operations or sensitive queries to users with verified privileges, minimizing the impact of insider threats.

- Deploy context-aware output filtering: Beyond general output sanitization, use context-aware filtering to dynamically adjust restrictions based on the application’s specific use case. For example, stricter filters may be necessary in financial contexts to avoid unauthorized advice generation, whereas medical contexts might need different types of scrutiny.

OWASP Top 10 LLM Cyber Security Risks and How to Prevent Them

The following were identified by the Open Web Application Security Project (OWASP) as the ten most significant security threats facing large language models. Refer to the original OWASP research for more details.

Prompt Injections

Prompt injections occur when an attacker manipulates an LLM by feeding it carefully crafted inputs, causing the model to execute unintended actions. This can be done directly, by bypassing system prompts (often referred to as “jailbreaking”), or indirectly, by embedding malicious prompts in external sources that the LLM processes. The impact of such an attack can range from data leakage to unauthorized actions performed by the LLM on behalf of the user.

Prevention strategies:

- Enforce strict access controls and privilege management for LLMs.

- Implement content segregation by distinguishing between trusted and untrusted inputs.

- Introduce a human-in-the-loop for critical operations to ensure oversight.

- Regularly monitor LLM inputs and outputs to detect unusual activity.

Insecure Output Handling

Insecure output handling refers to the failure to properly validate and sanitize LLM-generated outputs before passing them to other systems or users. If the LLM output is not scrutinized, it can lead to serious issues such as cross-site scripting (XSS), remote code execution, or other security breaches in downstream systems.

Prevention strategies:

- Apply validation and sanitization on all LLM outputs before further processing.

- Use encoding techniques to prevent the execution of potentially harmful code embedded in LLM outputs.

- Limit the privileges of LLMs to reduce the risk of executing unintended operations.

Training Data Poisoning

Training data poisoning involves introducing malicious data into the LLM’s training process, which can lead to compromised model behavior, biases, or security vulnerabilities. This can occur during pre-training, fine-tuning, or embedding stages, where the model inadvertently learns from poisoned data, leading to unreliable or harmful outputs.

Prevention strategies:

- Vet and monitor the sources of training data rigorously to ensure integrity.

- Implement sandboxing techniques to isolate training processes and prevent unauthorized data ingestion.

- Use adversarial training and anomaly detection methods to identify and mitigate the impact of poisoned data.

Model Denial of Service

Model DoS attacks exploit the resource-intensive nature of LLMs by overwhelming them with complex or resource-heavy queries. This can degrade the model’s performance, increase operational costs, or render the service unavailable to legitimate users.

Prevention strategies:

- Implement input validation to filter out excessive or malformed queries.

- Enforce rate limiting on API requests to control the frequency and volume of queries.

- Monitor resource usage continuously and set thresholds to detect and respond to potential DoS attempts.

Supply Chain Vulnerabilities

Supply chain vulnerabilities in LLMs arise from the reliance on third-party models, datasets, and plugins that may be compromised. These vulnerabilities can introduce biases, security flaws, or even allow attackers to gain unauthorized access through the supply chain.

Prevention strategies:

- Perform due diligence on third-party suppliers, including regular audits and security assessments.

- Maintain an up-to-date inventory of all components using a software bill of materials (SBOM).

- Monitor and update all third-party dependencies regularly to mitigate risks from outdated or vulnerable components.

Sensitive Information Disclosure

Sensitive information disclosure occurs when LLMs inadvertently reveal confidential data through their outputs. This could result from overfitting during training, improper data sanitization, or other system weaknesses that allow sensitive data to leak.

Prevention strategies:

- Ensure thorough data sanitization and filtering mechanisms are in place during both training and inference stages.

- Limit the LLM’s access to sensitive data and enforce strict access controls.

- Regularly audit and review the LLM’s outputs to ensure no sensitive information is being exposed.

Insecure Plugin Design

Insecure plugin design can lead to significant security risks when plugins are not properly validated or controlled. Plugins might accept untrusted inputs or execute operations with excessive privileges, leading to vulnerabilities such as remote code execution.

Prevention strategies:

- Enforce strict input validation and use parameterized inputs within plugins.

- Apply the least privilege principle to limit plugin capabilities and access.

- Use secure authentication methods like OAuth2 for plugins and validate API calls rigorously.

Excessive Agency

Excessive agency refers to the risk posed by LLMs that are granted too much autonomy or functionality, enabling them to perform actions that could have unintended consequences. This often results from granting excessive permissions or using overly broad functionalities within LLM-based systems.

Prevention strategies:

- Limit the functionality and permissions of LLM agents to the minimum necessary.

- Implement human-in-the-loop controls for high-impact actions to ensure oversight.

- Regularly review and audit the permissions and functions granted to LLM-based systems.

Overreliance

Overreliance on LLMs occurs when users or systems depend too heavily on the model’s outputs without proper oversight or validation. This can lead to misinformation, security vulnerabilities, or other negative outcomes due to the model’s potential inaccuracies.

Prevention strategies:

- Regularly monitor and validate LLM outputs against trusted sources.

- Communicate the limitations and risks of LLMs clearly to users.

- Implement continuous validation mechanisms to cross-check LLM outputs.

Model Theft

Model theft involves the unauthorized access and exfiltration of LLM models, which are valuable intellectual properties. Attackers may steal model weights, parameters, or the model itself, leading to economic losses, compromised competitive advantage, or unauthorized use.

Prevention strategies:

- Implement strong access controls and encryption for LLM model repositories.

- Regularly monitor and audit access logs to detect any unauthorized activities.

- Restrict the LLM’s access to external networks and resources to reduce the risk of data exfiltration.

Related content: Read our guide to AI security risks

Best Practices for LLM Security

Here are a few security best practices organizations developing or deploying LLM-based applications.

Adversarial Training

Adversarial training is a proactive security measure aimed at enhancing the resilience of large language models (LLMs) against malicious input manipulations. This involves training the LLM using adversarial examples—inputs specifically designed to cause the model to make errors. By exposing the model to these challenging scenarios, it learns to better identify and resist similar attacks in real-world applications.

The process typically involves generating adversarial examples through techniques like gradient-based optimization, where small perturbations are applied to inputs in a way that significantly alters the model’s outputs. These perturbed inputs are then included in the training dataset, allowing the model to learn from its mistakes. Regularly updating the model with new adversarial examples ensures ongoing protection against emerging threats.

Input Validation Mechanisms

Input validation mechanisms are essential for ensuring that the data fed into an LLM is safe, appropriate, and within expected parameters. These mechanisms act as the first line of defense against attacks that attempt to exploit vulnerabilities in the model by submitting malformed or malicious inputs.

Effective input validation involves several steps:

- Data sanitization: Removing or neutralizing potentially harmful content such as SQL commands, scripts, or malicious code.

- Input filtering: Establishing rules to ensure inputs adhere to expected formats, lengths, and types, thereby preventing buffer overflows or injection attacks.

- Content segregation: Differentiating between trusted and untrusted sources, ensuring that inputs from less reliable sources undergo stricter scrutiny.

Secure Execution Environments

Secure execution environments provide a controlled and isolated setting for running LLMs, thereby minimizing the risk of unauthorized access, data leaks, or system compromises. These environments leverage technologies such as containerization, sandboxing, and virtual machines to create a secure boundary around the LLM’s runtime processes.

Key features of a secure execution environment include:

- Isolation: Segregating the LLM from other system processes and networks to prevent lateral movement in case of a breach.

- Access control: Implementing strict authentication and authorization protocols to restrict who can access the LLM and what actions they can perform.

- Monitoring and logging: Continuously tracking system activity to detect and respond to any unusual behavior or potential threats.

Adopting Federated Learning

Federated learning is a decentralized approach to training LLMs that enhances privacy and security by keeping data on local devices rather than centralizing it in a single location. In this model, the LLM is trained across multiple devices or servers, each holding its own dataset. The local models are updated independently, and only the model parameters, rather than the raw data, are shared and aggregated to form a global model.

This approach offers several security benefits:

- Data privacy: Sensitive data remains on the user’s device, reducing the risk of exposure during training.

- Reduced attack surface: Since data is distributed across many devices, it becomes harder for attackers to target a single point of failure.

- Improved compliance: Federated learning aligns well with data protection regulations like GDPR, which emphasize data minimization and user consent.

Implementing Bias Mitigation Techniques

Bias mitigation techniques are critical for ensuring that LLMs operate fairly by minimizing the impact of biases in the training data. LLMs trained on biased datasets can perpetuate and even amplify these biases, leading to discriminatory or harmful outputs.

Several strategies can be employed to mitigate bias:

- Diverse training data: Ensuring that the training dataset includes a wide range of perspectives and demographic representations to reduce inherent biases.

- Bias detection tools: Utilizing algorithms and frameworks that can identify and quantify bias within the model’s outputs, enabling targeted adjustments.

- Regular audits: Conducting ongoing evaluations of the LLM’s performance, particularly in sensitive areas, to identify and address any emerging biases.

Implementing AI/LLM-Based Security Solution for Detecting and Preventing AI Attacks

To effectively detect and prevent AI-driven attacks, integrating AI and LLM-based security solutions into an organization’s security infrastructure is essential. These solutions use the advanced capabilities of LLMs to analyze patterns in data, detect anomalies, and predict potential threats, improving the overall security posture:

- Anomaly detection systems powered by AI can continuously monitor network traffic, user behavior, and system interactions to identify suspicious activities. By leveraging LLMs’ ability to process and understand vast amounts of data, these systems can detect subtle deviations from normal behavior that may indicate an attack, such as social engineering attempts or malicious insider activities.

- AI-based phishing detection systems analyze emails and messages for indicators of phishing attempts. By using LLMs trained on large datasets of phishing and legitimate emails, these systems can identify subtle linguistic cues, tone inconsistencies, and contextual anomalies that traditional filters may miss.

- Automated response systems that integrate LLMs can be used to quickly analyze incidents and recommend or execute appropriate defensive actions. These systems help reduce the time between detecting and responding to attacks, which is critical for mitigating damage and preventing further exploitation.

LLM Security with Perception Point

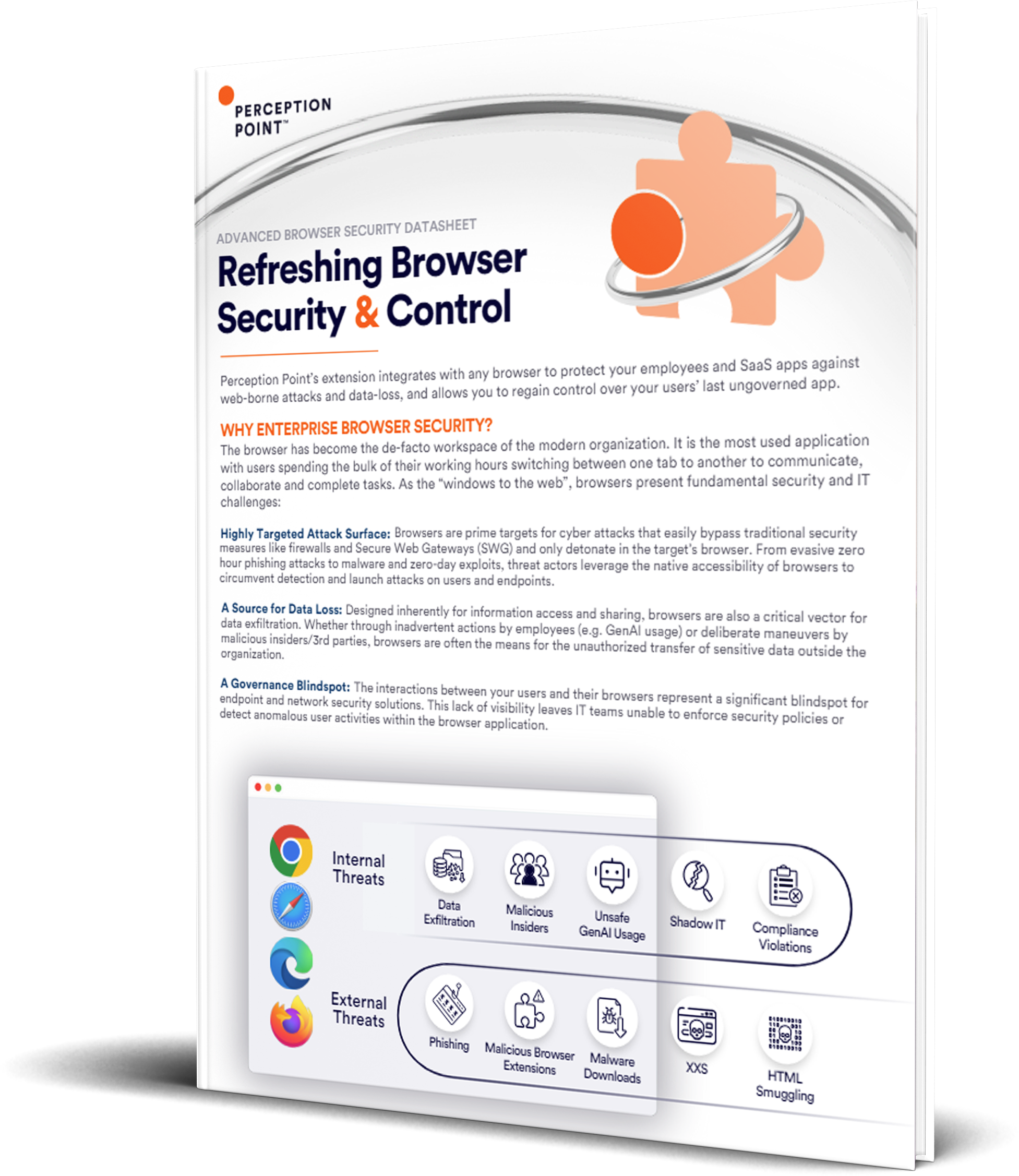

Perception Point protects the modern workspace across email, browsers, and SaaS apps by uniquely combining an advanced AI-powered threat prevention solution with a managed incident response service. By fusing GenAI technology and human insight, Perception Point protects the productivity tools that matter the most to your business against any cyber threat.

Patented AI-powered detection technology, scale-agnostic dynamic scanning, and multi-layered architecture intercept all social engineering attempts, file & URL-based threats, malicious insiders, and data leaks. Perception Point’s platform is enhanced by cutting-edge LLM models to thwart known and emerging threats.

Reduce resource spend and time needed to secure your users’ email and workspace apps. Our all-included 24/7 Incident Response service, powered by autonomous AI and cybersecurity experts, manages our platform for you. No need to optimize detection, hunt for new threats, remediate incidents, or handle user requests. We do it for you — in record time.

Contact us today for a live demo.

Implementing LLM security involves integrating offensive and defensive strategies during the model’s development lifecycle. This includes careful management of the training data, deploying secure infrastructure, and regularly updating systems to defend against emerging threats.

– Data Breaches

– Model Exploitation

– Misinformation

-Ethical and Legal Risks

Large language models (LLMs) are increasingly being exploited in social engineering and phishing attacks due to their ability to generate highly convincing and personalized content at scale. Attackers can use LLMs to automate the creation of phishing emails, making it easier to launch targeted campaigns that are more difficult to detect and prevent.

– Prompt Injections

– Insecure Output Handling

– Training Data Poisoning

– Model Denial of Service

– Supply Chain Vulnerabilities

– Sensitive Information Disclosure

– Insecure Plugin Design

– Excessive Agency

– Overreliance

– Model Theft

Here are a few security best practices organizations developing or deploying LLM-based applications.

– Adversarial Training

– Input Validation Mechanisms

– Secure Execution Environments

– Adopting Federated Learning

– Implementing Bias Mitigation Techniques

– Implementing AI/LLM-Based Security Solution for Detecting and Preventing AI Attacks