Google recently announced the full-scale launch of Bard Extensions, integrating its conversational generative AI (GenAI) tool into its other services. Bard can now draw on users’ personal data to perform a wide range of tasks – organize emails, book flights, plan trips, draft message replies, and much more.

With Google’s services already deeply woven into our daily lives, this integration marks a genuine step forward for practical, everyday applications of GenAI, offering more efficient ways to handle personal tasks and workflows. Meanwhile, as Google releases more convenient AI tools, other web-based AI features are sprouting up to meet demand from users who now want browser-based productivity extensions.

Users, however, must also be cautious and responsible. As useful as Bard Extensions and similar tools can be, they open the door to security flaws that can compromise users’ personal data, as well as to other risks not yet discovered. Users keen on adopting Bard or other GenAI productivity tools would do well to learn best practices and seek comprehensive security solutions before handing over their sensitive information.

Reviewing Personal Data

Google explicitly states that human reviewers may read users’ conversations with Bard, which can contain anything from invoices to bank details to love notes. Users are warned accordingly not to enter confidential information or any data they wouldn’t want a reviewer to see or Google to use to improve its products, services, and machine-learning technologies.

Google and other GenAI providers are also likely to use users’ personal data to retrain their machine learning models, a necessary part of improving GenAI. Much of AI’s power lies in its ability to learn from new information, but when that information comes from users who have trusted a GenAI extension with their personal data, there is a risk that passwords, bank information, or contact details could be absorbed into the model and surface in Bard’s publicly available services.

Undetermined Security Concerns

As Bard becomes more widely integrated across Google’s services, experts and users alike are still working to understand the full extent of what it can do. And like every cutting-edge player in the AI field, Google continues to release products without knowing exactly how they will handle users’ information. For instance, it was recently revealed that if you share a Bard conversation with a friend via the Share button, the entire conversation may show up in standard Google search results for anyone to see.

However enticing it may be for improving workflows and efficiency, giving Bard or any other AI-powered extension permission to carry out everyday tasks on your behalf can backfire when the model hallucinates, producing the false or inaccurate output that GenAI is known to generate from time to time.

For Google users, this could mean booking the wrong flight, paying an invoice for the wrong amount, or sharing documents with the wrong person. Exposing personal data to the wrong party or a malicious actor, or sending the wrong data to the right person, can cause real harm: identity theft, loss of digital privacy, financial loss, or the exposure of embarrassing correspondence.

Extending Security

For the average AI user, the best practice is simply not to share any personal information with still-unpredictable AI assistants. But that alone does not guarantee full security.

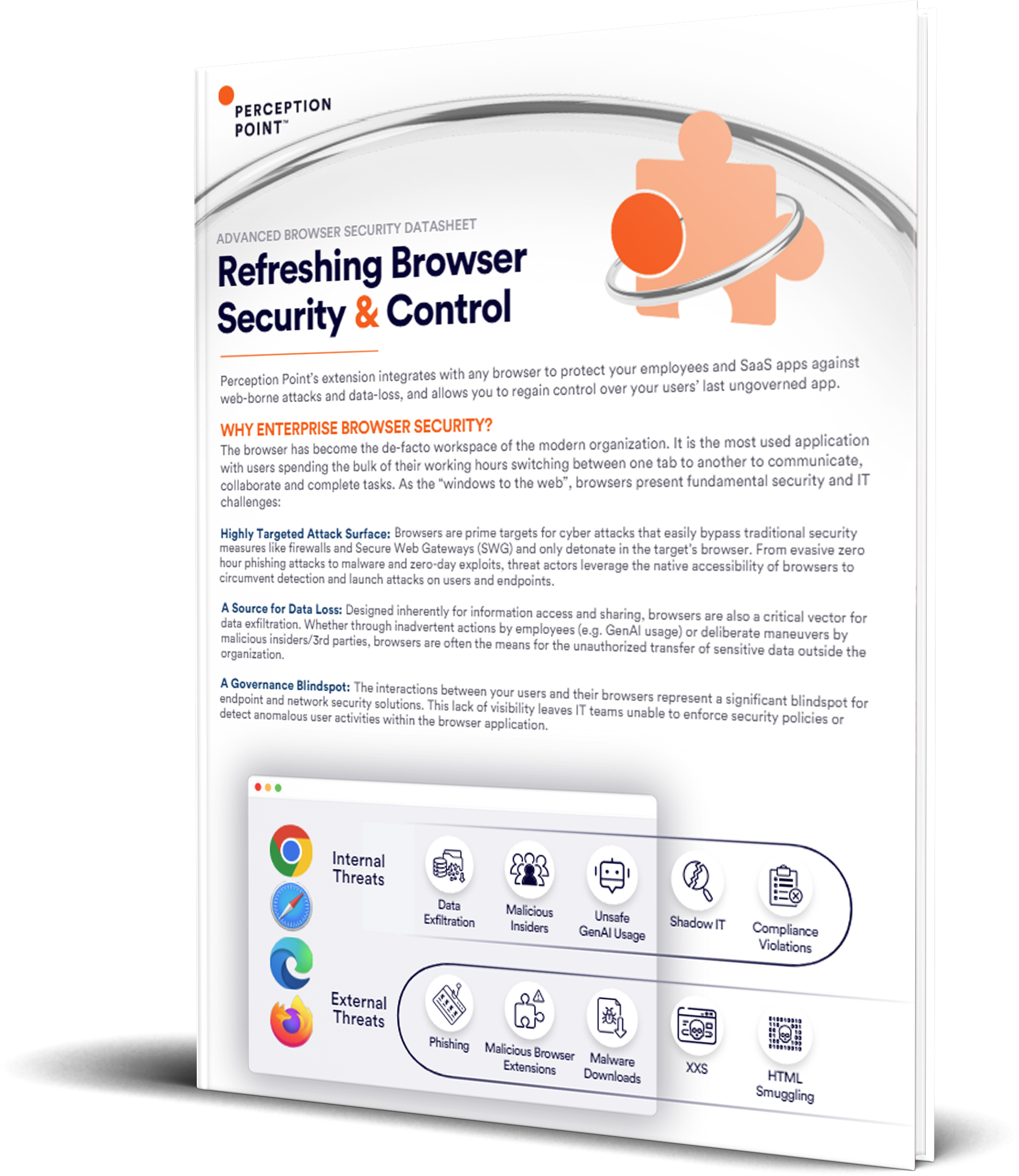

The shift to SaaS and web-based applications has already made the browser a prime target for attackers. And as people adopt more web-based AI tools, the window of opportunity to steal sensitive data opens a bit wider. As more browser extensions try to piggyback on the success of GenAI, enticing users to install them with new and efficient features, people need to be aware that many of these extensions may end up stealing their information or, in the case of ChatGPT-related tools, their OpenAI API keys.

Fortunately, browser security extensions that prevent data theft already exist. By installing a browser extension with data loss prevention (DLP) controls, users can reduce the risk that other browser extensions, AI-based or otherwise, misuse or leak their personal data. These security extensions can inspect browser activity and enforce security policies, preventing web-based apps from grabbing sensitive information.
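To make DLP inspection more concrete, here is a minimal TypeScript sketch of the kind of check such an extension might run on text before it is submitted to a GenAI prompt. It assumes simple regular-expression rules; the rule names, the patterns, and the functions `findSensitiveData` and `shouldBlockSubmission` are hypothetical illustrations, not the API of any real DLP product or browser extension platform.

```typescript
// Hypothetical DLP rule: a label plus a pattern for one kind of sensitive data.
interface DlpRule {
  name: string;
  pattern: RegExp;
}

// Illustrative rules only (assumptions, not a real product's rule set).
// Patterns have no "g" flag, so RegExp.test() keeps no state between calls.
const RULES: DlpRule[] = [
  // Strings shaped like OpenAI-style secret keys: "sk-" followed by 20+ key characters.
  { name: "openai-api-key", pattern: /\bsk-[A-Za-z0-9_-]{20,}/ },
  // 13 to 16 digits, optionally separated by spaces or dashes (crude card-number check).
  { name: "credit-card", pattern: /\b(?:\d[ -]?){13,16}\b/ },
  // A simple email-address shape.
  { name: "email", pattern: /\b[\w.+-]+@[\w-]+\.[\w.-]+\b/ },
];

// Returns the names of every rule whose pattern appears in the text, in rule order.
function findSensitiveData(text: string): string[] {
  return RULES.filter((rule) => rule.pattern.test(text)).map((rule) => rule.name);
}

// Policy decision: block the submission if any rule matched.
function shouldBlockSubmission(text: string): boolean {
  return findSensitiveData(text).length > 0;
}

// Usage: inspect a prompt before it leaves the browser.
const prompt = "Pay this invoice with card 4111 1111 1111 1111";
const findings = findSensitiveData(prompt); // ["credit-card"]
if (shouldBlockSubmission(prompt)) {
  console.log(`Blocked: ${findings.join(", ")}`);
}
```

Pattern matching alone produces both false positives and misses; real DLP tools typically add validation (such as Luhn checks for card numbers), surrounding context, and centrally managed policies on top of checks like these.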

Guard the Bard

While Bard and similar extensions promise improved productivity and convenience, they carry substantial cybersecurity risks. Whenever personal data is involved, there are underlying security concerns that users must be aware of, and even more so in the still-uncharted waters of generative AI.

As users allow Bard and other AI and web-based tools to act independently with sensitive personal data, more severe repercussions are likely in store for those who leave themselves vulnerable without browser security extensions or DLP controls. After all, a productivity boost is worth far less if it raises the chance of exposing sensitive information, and individuals need to put AI safeguards in place before their data is mishandled at their expense.

This article first appeared in Unite.AI, written by Tal Zamir on November 15, 2023.