What Is AI Security?

AI security encompasses measures and technologies designed to protect AI systems from unauthorized access, manipulation, and malicious attacks. These measures ensure that AI-powered systems operate as intended, maintain data integrity, and prevent data leaks or misuse.

Given the increasing reliance on AI systems, securing them is critical. AI security involves technical safeguards, like encryption and secure algorithms, and procedural measures such as regular audits and compliance checks.

Another meaning of AI security is the use of AI to improve security technologies, such as threat intelligence, intrusion detection, and email security.

This is part of an extensive series of guides about machine learning.

In this article

AI Security Risks

Here are some of the main security risks affecting AI systems.

Data Breaches

Data breaches are a significant concern for AI systems, as they often handle large volumes of sensitive information. If an AI system’s data storage or transmission channels are compromised, it could lead to unauthorized access to confidential data. This not only violates privacy laws but also can lead to significant financial and reputational damage for organizations.

AI systems must implement robust encryption methods and secure communication protocols to mitigate the risk of data breaches. Regular security audits and compliance with data protection regulations like GDPR and CCPA are essential to ensure that the data managed by AI systems remains secure from unauthorized access and leaks.

Bias and Discrimination

AI systems can perpetuate or even amplify biases present in the training data. This can lead to discriminatory outcomes in decision-making processes, such as hiring, lending, or law enforcement, causing ethical and legal issues.

Mitigating bias requires a multifaceted approach, including ensuring diverse and representative training data, implementing fairness-aware algorithms, and regularly auditing AI systems for biased outcomes. Organizations should establish ethical guidelines and oversight mechanisms to monitor and address potential biases in AI applications, ensuring they operate fairly and transparently.

Adversarial Attacks

Adversarial attacks involve manipulating input data to deceive AI systems into making incorrect predictions or decisions. These attacks exploit vulnerabilities in AI models by introducing subtle, often imperceptible changes to the input data, which can cause the model to misinterpret the data and produce undesired outputs.

To mitigate adversarial attacks, AI systems should incorporate adversarial training, where models are exposed to both normal and adversarial examples during the training process. This helps models learn to recognize and resist manipulation. Additionally, implementing robust input validation and anomaly detection mechanisms can help detect and thwart adversarial attempts.

Model Theft

Model theft, also known as model inversion or extraction, occurs when attackers recreate an AI model by querying it extensively and using the responses to approximate its functionality. This can lead to intellectual property theft and potential misuse of the model’s capabilities.

Preventing model theft involves limiting the amount of information that can be inferred from model outputs. Techniques such as differential privacy, which adds noise to outputs to obscure the underlying data, can be effective. Additionally, implementing strict access controls and monitoring usage patterns can help detect and prevent unauthorized attempts to extract model details.

Manipulation of Training Data

Manipulation of training data, or data poisoning, involves injecting malicious data into the training dataset to influence the behavior of the AI model. This can degrade the model’s performance or cause it to make biased or incorrect predictions.

To protect against data poisoning, it is crucial to maintain strict data curation and validation processes. Regularly auditing and cleaning training data can help identify and remove malicious inputs. Employing techniques such as robust learning algorithms, which are less sensitive to outliers and anomalies, can also enhance resistance to data manipulation.

Resource Exhaustion Attacks

Resource exhaustion attacks, including denial-of-service (DoS) attacks, aim to overwhelm AI systems by consuming their computational resources, rendering them incapable of functioning properly. These attacks can disrupt services and degrade performance, leading to significant operational issues.

Mitigating resource exhaustion attacks requires implementing rate limiting and resource allocation controls to prevent excessive usage by any single entity. Employing load balancing and scaling mechanisms can help distribute the computational load more evenly, ensuring that the system remains operational even under high demand. Regular monitoring and anomaly detection can also help identify and respond to such attacks promptly.

Tal ZamirCTO, Perception Point

Tal Zamir is a 20-year software industry leader with a track record of solving urgent business challenges by reimagining how technology works.

TIPS FROM THE EXPERTS

- Implement multi-layered AI defenses Combine different AI models for layered security. Use generative models for threat detection and discriminative models for behavior analysis. This multi-faceted approach ensures comprehensive protection against diverse threats.

- Deploy zero-trust architecture Implement a zero-trust security model that continuously verifies and authenticates every user and device accessing the AI systems. This minimizes the risk of insider threats and unauthorized access.

- Develop AI-specific threat intelligence Create and maintain a threat intelligence feed specifically for AI-related threats. This helps in staying ahead of emerging threats and enables timely updates to security measures.

- Regularly rotate and update encryption keys Frequently rotate encryption keys used in AI systems to protect data in transit and at rest. This practice minimizes the risk of key compromise and ensures robust data protection.

New Security Risks Raised by Generative AI

Beyond the standard security risks associated with using AI-based tools, generative AI also introduces several emerging risks:

Sophisticated Phishing Attacks

Generative AI can be leveraged to create highly sophisticated phishing attacks that are difficult to distinguish from legitimate communications. By using AI, attackers can craft personalized and convincing phishing emails or messages that target individuals based on their personal information, habits, and preferences. These AI-generated phishing attempts can adapt their language and tone to mimic trusted sources, increasing the likelihood of successful deception.

To counter this threat, organizations must implement advanced email filtering systems powered by AI to detect subtle signs of phishing. Additionally, regular training and awareness programs for employees about the evolving nature of phishing attacks are crucial. Ensuring that individuals can recognize and report suspicious activities can help mitigate the risks posed by sophisticated phishing attempts.

Direct Prompt Injections

Direct prompt injections involve manipulating AI systems by feeding them malicious inputs designed to alter their behavior or output. In the context of generative AI, attackers can craft specific prompts that cause the AI to produce harmful or misleading information. This can be particularly dangerous in applications where AI systems generate content or make decisions based on user input.

To protect against direct prompt injections, developers should implement robust input validation and sanitization techniques. Regularly updating and fine-tuning AI models to recognize and reject malicious prompts is also essential. Monitoring AI interactions and establishing a feedback loop to identify and address potential vulnerabilities can further enhance security.

Automated Malware Generation

AI systems, especially those with generative capabilities, can be exploited to create sophisticated malware.

By leveraging machine learning algorithms, malicious actors can develop malware that adapts to evade traditional detection methods, making it harder for security systems to identify and mitigate threats. Integrating AI-driven security tools that can recognize and counteract adaptive malware can help mitigate these risks.

LLM Privacy Leaks

Large language models (LLMs) can inadvertently memorize and reproduce sensitive information from their training data, or from user prompts, leading to privacy leaks. This risk is particularly concerning when LLMs are trained on large datasets that may include confidential or personal data. If not properly managed, these models can reveal private information in their responses, posing significant privacy risks.

To mitigate privacy leaks, it is important to employ differential privacy techniques during the training phase of LLMs. Ensuring that the training data is anonymized and carefully curated can reduce the risk of unintentional data exposure. Additionally, implementing strict access controls and monitoring the output of LLMs for potential privacy violations are critical steps in safeguarding sensitive information.

Learn more in our detailed guide to generative AI cybersecurity

Key AI Security Frameworks

OWASP Top 10 LLM Security Risks

OWASP’s Top 10 for Large Language Models (LLMs) identifies common vulnerabilities specific to LLMs, covering areas like data leakage, model inversion attacks, and unintended memorization. It provides a standardized checklist for developers and security professionals to audit and protect LLMs.

This framework helps in recognizing and handling the security challenges posed by the deployment of LLMs in various applications. It advocates for secure coding practices, regular vulnerability assessments, and integrating security controls during the design phase.

Google’s Secure AI Framework (SAIF)

Google’s Secure AI Framework (SAIF) is designed to enhance security across AI operations, emphasizing the need to secure AI algorithms and their operational environment. SAIF encompasses a range of security measures from initial design to deployment, including strategies for encryption, secure user access, and anomaly detection.

This framework also focuses on the continuity of AI systems, promoting resilient design that anticipates failures and mitigates them. The SAIF advocates for ongoing security assessments to adapt to emerging threats, ensuring that AI systems remain reliable over time.

NIST’s Artificial Intelligence Risk Management framework

NIST’s Artificial Intelligence Risk Management framework offers guidelines for managing risks associated with AI systems. It promotes a structured approach for identifying, assessing, and responding to risks, tailored for challenges like algorithmic bias or unexpected behavior. It supports consistent security practices across AI applications, improving transparency.

NIST’s framework encourages collaboration among stakeholders to share best practices and challenges, enhancing collective understanding and improvement in AI security.

The Framework for AI Cybersecurity Practices (FAICP)

The Framework for AI Cybersecurity Practices (FAICP), developed by the European Union Agency for Cybersecurity (ENISA), is designed to address the security challenges posed by the integration of AI systems across various sectors. The framework outlines a lifecycle approach, starting with a pre-development phase where organizations assess AI application scopes and identify potential security and privacy risks.

FAICP emphasizes the importance of data analysis for uncovering biases and vulnerabilities and recommends establishing a governance structure for overseeing AI security measures. In the development and post-deployment phases, the FAICP stresses the incorporation of security by design, including secure coding practices, rigorous testing, and transparent AI operations in line with international standards like ISO/IEC 23894.

AI Security Best Practices

Here are some tips for ensuring the security of AI systems.

Customize Generative AI Architecture to Improve Security

Customizing the architecture of generative AI models can significantly enhance their security. This involves designing models with built-in security features such as access controls, anomaly detection, and automated threat response mechanisms.

Additionally, conducting regular threat modeling and security assessments during the development phase helps identify and mitigate risks early. This proactive approach ensures that the AI system is resilient against attacks and unauthorized manipulation.

Harden AI Models

Hardening AI models involves strengthening them against adversarial attacks, which attempt to fool the models into making incorrect predictions. Techniques such as adversarial training, where models are trained with both normal and adversarial examples, can enhance their robustness.

Implementing defensive measures like input validation and anomaly detection can also help protect AI models from adversarial attacks. These strategies ensure that the models are less susceptible to manipulation, maintaining their accuracy and reliability in real-world applications.

Prioritize Input Sanitization and Prompt Handling

Input sanitization is critical for preventing malicious inputs from compromising AI systems. This involves validating and cleaning all data inputs to ensure they are free from harmful elements that could exploit vulnerabilities in the AI models. Establishing strict data validation protocols and using tools to sanitize inputs before they are processed by AI models helps prevent injection attacks and other malicious activities.

For generative AI systems, optimizing prompt handling is essential to prevent exploitation through malicious or misleading prompts. This includes implementing mechanisms to detect and filter out harmful or inappropriate prompts that could lead to unintended outputs. Using context-aware filtering and developing robust prompt management protocols helps ensure that generative AI systems respond appropriately and securely.

Monitor and Log AI Systems

Continuous monitoring and logging of AI system activities are crucial for maintaining security. By tracking system behaviors and logging interactions, organizations can detect and respond to anomalies or suspicious activities in real time.

Implementing automated monitoring tools that utilize AI to analyze logs and detect unusual patterns can enhance threat detection capabilities. Regularly reviewing logs and performing security audits ensures that potential issues are identified and addressed promptly.

Establish an AI Incident Response Plan

An AI incident response plan is vital for effectively managing security breaches and other incidents involving AI systems. This plan should outline procedures for detecting, responding to, and recovering from incidents, ensuring minimal disruption to operations.

Including clear roles and responsibilities, communication protocols, and recovery strategies in the incident response plan helps organizations respond swiftly and efficiently to AI-related security incidents. Regularly updating and testing the plan ensures preparedness for evolving threats, enhancing overall AI security.

How AI is Used in Security: AI-Based Security Solutions

There are several types of tools that can be used to secure AI systems.

Threat Intelligence Platforms

AI-powered threat intelligence platforms collect, process, and analyze vast amounts of data from various sources to identify potential threats. These platforms use machine learning algorithms to detect patterns and anomalies indicative of cyber threats, enabling proactive defense measures.

By leveraging AI, threat intelligence platforms can quickly process real-time data, providing actionable insights to security teams. This enhances the ability to predict and prevent attacks before they occur, reducing the overall risk to organizations. Continuous learning from new threat data ensures that these platforms adapt to evolving cyber threats.

Intrusion Detection and Prevention Systems

AI-enhanced intrusion detection and prevention systems (IDPS) monitor network traffic for signs of malicious activity. By analyzing data in real time, these systems can identify unusual patterns that may indicate an ongoing attack. AI algorithms enable IDPS to detect previously unknown threats by recognizing deviations from normal behavior.

Integrating AI with traditional IDPS improves detection accuracy and reduces false positives, allowing security teams to focus on genuine threats. Machine learning models can be trained on historical attack data to recognize complex attack vectors, enhancing the system’s ability to prevent breaches.

SIEM

Security Information and Event Management (SIEM) systems use AI to analyze security alerts generated by various applications and network hardware in real-time. AI-enhanced SIEM systems can correlate data from multiple sources to provide a comprehensive view of the security landscape, identifying potential threats more effectively.

By automating the analysis process, AI reduces the time needed to detect and respond to incidents, allowing for quicker remediation. Continuous learning capabilities enable SIEM systems to improve over time, adapting to new security challenges.

Phishing Detection

AI-based phishing detection tools analyze email content, metadata, and user behavior to identify phishing attempts. AI algorithms, in particular generative models, can detect subtle cues and patterns that indicate fraudulent messages, even if they mimic legitimate communications closely.

These tools can significantly reduce the risk of successful phishing attacks by automatically filtering out suspicious emails before they reach users’ inboxes. Integrating these tools with user training programs enhances overall organizational security by educating employees on how to recognize and report phishing attempts.

Email Security Solutions

AI-based email security solutions go beyond phishing detection, providing comprehensive protection against a range of email-borne threats, including spam, malware, and business email compromise (BEC) attacks. These solutions analyze email content, attachments, and sender behavior to identify and block malicious messages.

Machine learning algorithms help these systems adapt to new attack methods, ensuring continuous protection. By leveraging AI, email security solutions can offer real-time threat detection and response, minimizing the risk of successful attacks.

Endpoint Security Solutions

AI-driven endpoint security solutions protect devices like laptops, smartphones, and IoT devices from malware, ransomware, and other cyber threats. These solutions use machine learning to analyze behavior and detect anomalies, identifying potential threats before they can cause harm.

By continuously monitoring endpoint activities, AI-based security tools can detect and respond to threats in real time, preventing breaches and minimizing damage.

Combining AI with Human Expertise in Cybersecurity

Integrating human expertise with AI security tools leverages the strengths of both to create a stronger defense against cyber threats. AI systems can process vast amounts of data at incredible speeds, identifying patterns and anomalies that might indicate a security breach.

However, human experts are crucial for interpreting these findings, making strategic decisions, and addressing complex scenarios that require nuanced understanding and contextual knowledge.

By working together, AI can handle the heavy lifting of data analysis and threat detection, while human professionals apply critical thinking and experience to manage incidents and refine security protocols. Integrating AI and human expertise also enhances the adaptability and resilience of cybersecurity efforts. AI can continuously learn and adapt to new threats through machine learning algorithms, but these systems still need human oversight to ensure their outputs remain accurate and relevant.

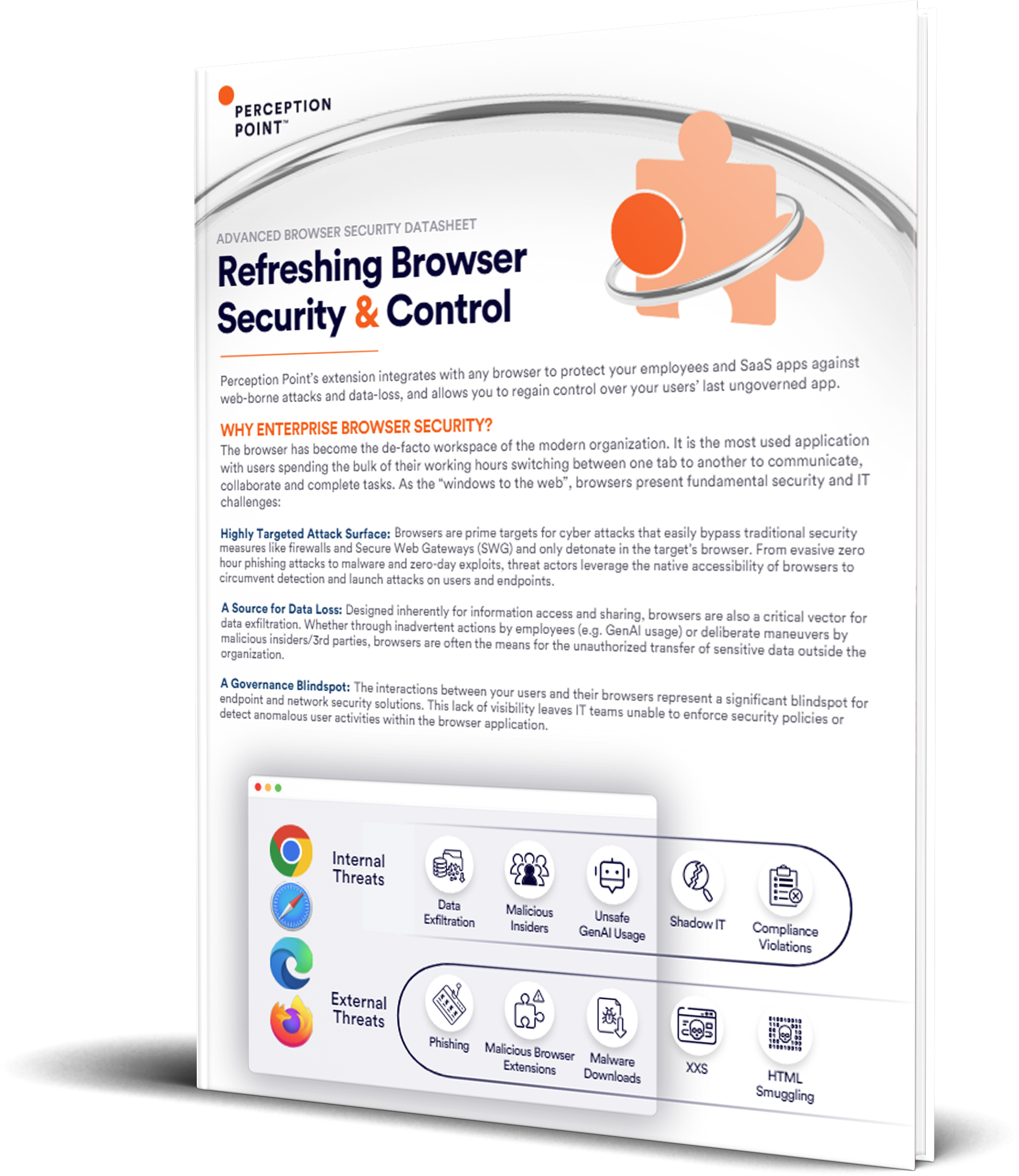

AI-Based Cybersecurity with Perception Point

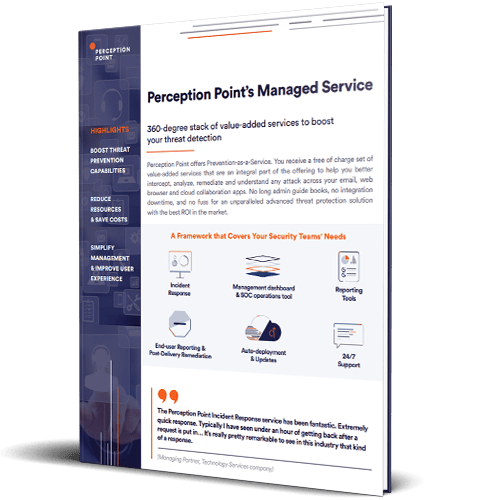

Perception Point uses AI to fight AI to protect the modern workspace by uniquely combining an advanced AI-powered threat prevention solution with a managed incident response service. By fusing GenAI technology and human insight, Perception Point protects the productivity tools that matter the most to your business against any threat.

Patented AI-powered detection technology, scale-agnostic dynamic scanning, and multi-layered architecture intercept all social engineering attempts, file & URL-based threats, malicious insiders, and data leaks. Perception Point’s platform is enhanced by cutting-edge LLM models to thwart known and emerging threats.

Reduce resource spend and time needed to secure your users’ email and workspace apps. Our all-included 24/7 Incident Response service, powered by autonomous AI and cybersecurity experts, manages our platform for you. No need to optimize detection, hunt for new threats, remediate incidents, or handle user requests. We do it for you — in record time.

Contact us today for a live demo.

See Additional Guides on Key Machine Learning Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of machine learning.

Auto Image Crop

Authored by Cloudinary

- Auto Image Crop: Use Cases, Features, and Best Practices

- 5 Ways to Crop Images in HTML/CSS

- Cropping Images in Python With Pillow and OpenCV

Advanced Threat Protection

Authored by Cynet

- Advanced Threat Protection: A Real-Time Threat Killer Machine

- Advanced Threat Detection: Catch & Eliminate Sneak Attacks

- Threat Detection and Threat Prevention: Tools and Tech

ChatGPT Alternatives

Authored by Tabnine

- The best ChatGPT alternatives for writing, coding, and more

- Will ChatGPT replace programmers?

- Top 11 ChatGPT security risks and how to use it securely in your

organization

AI security encompasses measures and technologies designed to protect AI systems from unauthorized access, manipulation, and malicious attacks. These measures ensure that AI-powered systems operate as intended, maintain data integrity, and prevent data leaks or misuse.

Here are some of the main security risks affecting AI systems.

1. Data Breaches

2. Bias and Discrimination

3. Adversarial Attacks

4. Model Theft

5. Manipulation of Training Data

6. Resource Exhaustion Attacks

Beyond the standard security risks associated with using AI-based tools, generative AI also introduces several emerging risks:

1. Sophisticated Phishing Attacks

2. Direct Prompt Injections

3. Automated Malware Generation

4. LLM Privacy Leaks

1. OWASP Top 10 LLM Security Risks

2. Google’s Secure AI Framework (SAIF)

3. NIST’s Artificial Intelligence Risk Management framework

4. The Framework for AI Cybersecurity Practices (FAICP)

Here are some tips for ensuring the security of AI systems.

1. Customize Generative AI Architecture to Improve Security

2. Harden AI Models

3. Prioritize Input Sanitization and Prompt Handling

4. Monitor and Log AI Systems

5. Establish an AI Incident Response Plan

There are several types of tools that can be used to secure AI systems.

1. Threat Intelligence Platforms

2. Intrusion Detection and Prevention Systems

3. SIEM

4. Phishing Detection

5. Email Security Solutions

6. Endpoint Security Solutions