1. What is Microsoft Copilot?

Microsoft Copilot is a generative AI assistant integrated into Microsoft 365 and designed to help users create content. It draws on a wide range of organizational data, including emails, documents, and calendar meetings, as input for generating text. While it promises to transform corporate communication and productivity, it also introduces security challenges that require careful consideration.

2. Information Risks Associated with Microsoft Copilot

Data Exposure and Privacy Risks:

Copilot’s reliance on multiple data sources introduces the risk that it generates content based on sensitive data, passwords, or personally identifiable information (PII). For example, Copilot could draft an email that includes sensitive customer information, such as credit card details; if the draft is sent without proper review, that data is unintentionally exposed.

Cross-Client Data Leakage:

When a company serves multiple clients, Copilot might inadvertently generate content for one client that contains, or is based on, another client’s data. Such cross-client leakage violates privacy obligations and can damage client relationships. For instance, Copilot might draft a proposal for one client that includes proprietary information from another, potentially breaching client contracts.

Reduced Resilience to Social Engineering/Phishing:

As Copilot becomes integral to corporate communication, it can homogenize employee writing styles toward a generic, formal corporate tone. This uniformity may make it harder for recipients to distinguish legitimate messages from phishing attempts. For example, an attacker could craft a phishing email that closely mimics typical corporate communication; without the stylistic inconsistencies that often give such emails away, the attack is harder to spot.

Lack of Data Loss Prevention (DLP) Labels:

Content generated by Copilot might not carry appropriate DLP labels, making sensitive information difficult to identify and manage. This can lead to untracked data breaches and non-compliance with regulatory requirements. For example, a Copilot-generated report might contain confidential financial data without the necessary DLP label, putting that data at risk of exposure.

Limited Audit Trail:

Compared to actions taken directly by a human user, Copilot’s access to data may lack the robust audit trail needed for tracking and accountability, making it harder to trace actions back to specific users. For example, if Copilot accesses multiple sensitive documents on behalf of an employee, it might be difficult to determine who initiated the action, and the employee might be able to deny having triggered it.

Risk of Hallucinations and Mistakes:

Another concern is that Copilot may generate content containing errors or hallucinations. If an employee publishes such content without checking it, the consequences can be severe, harming the company’s image and the accuracy of information shared with clients or stakeholders. For instance, Copilot might generate a press release with factual inaccuracies that damage the company’s reputation.

Copyright and Legal Implications:

Copilot’s content generation also raises copyright and other legal concerns. It may incorporate copyrighted material into documents, exposing the company to legal disputes such as copyright infringement claims, with the resulting financial and reputational damage. For example, a Copilot-generated marketing brochure might include copyrighted images, leading to a lawsuit.

Amplifying User Errors:

One significant risk with Microsoft Copilot is that it can amplify users’ file-sharing errors. Because Copilot can draw on content the user has access to, a document that was shared too broadly becomes material it may surface in new drafts. Imagine an employee who uses Copilot to draft an email and, in the process, unintentionally exposes sensitive data from such a shared document. Copilot’s extensive access to data sources can magnify content-sharing mistakes and compromise data security.

Conflict with Company Values and Policies:

Generated content may not always align with a company’s values and policies. Copilot might create content that contradicts the company’s mission statement, undermining the organization’s integrity and reputation, or draft a statement that promotes a product banned in certain regions.

Blind Trust in Output:

Users may fall into the trap of blindly trusting Copilot’s output, neglecting the importance of careful review. This complacency can lead to security and compliance breaches as sensitive data or inaccurate information is disseminated without scrutiny. For example, a marketing manager could send a promotional email generated by Copilot without realizing that it contains outdated pricing information, leading to customer dissatisfaction and revenue loss.

3. Recommendations for CISOs/CIOs

CISOs (Chief Information Security Officers) and CIOs (Chief Information Officers) play a pivotal role in mitigating the security risks associated with Microsoft Copilot. Here are some recommendations to address these concerns:

- User Education: Educate employees about Copilot’s capabilities and limitations. Encourage users not to rely solely on its output and to be cautious when generating content that involves sensitive data.

- Monitoring User Activity: Implement monitoring and warning systems to track user activity in Copilot, and warn users when generated content appears to deviate from company policies or values.

- Data Loss Prevention (DLP): Ensure that DLP policies and mechanisms are in place to manage and protect sensitive information in Copilot-generated content. This includes proper labeling and tracking.

- Advanced Email Security Solutions: Companies should invest in advanced email security solutions capable of detecting subtle social engineering attacks. Because Copilot may make legitimate writing more uniform and generic, these solutions become vital for identifying and preventing sophisticated attacks.

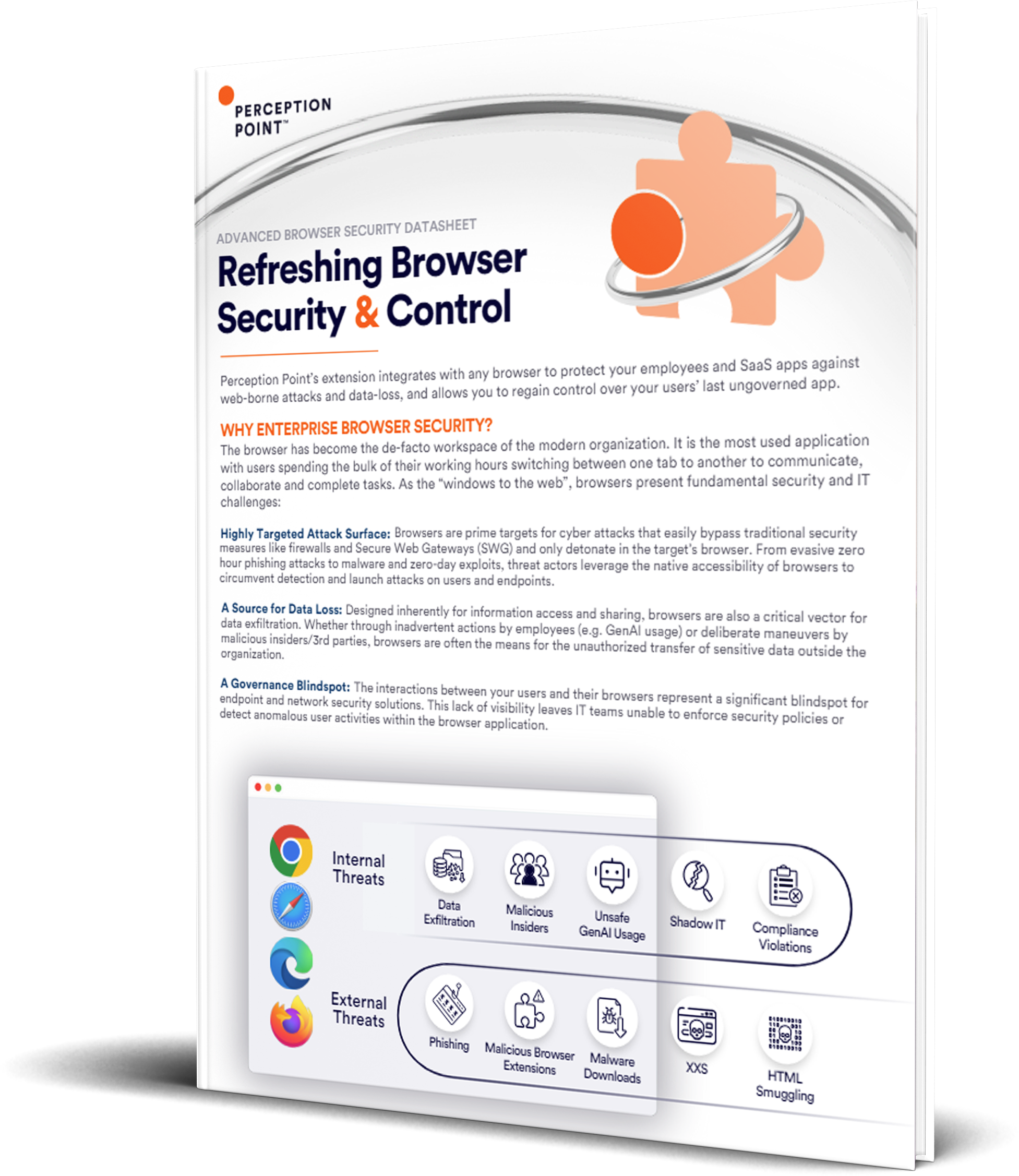

- Advanced Browser Security Solutions: Where a sanctioned and safer option such as Microsoft Copilot is available, use advanced browser security solutions to block unsanctioned generative AI applications. These solutions ensure that only approved, secure AI tools are accessed, reducing the risk of data breaches and content errors.
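
To make the DLP and monitoring recommendations more concrete, here is a minimal sketch of a pre-send check on Copilot-generated text. It is a hypothetical, standalone illustration, not a Microsoft Purview or Copilot API: the function names `find_card_numbers` and `review_draft`, the regular expression, and passing the draft's sensitivity label in as a plain string are all assumptions. It flags digit sequences that pass the Luhn checksum used by payment card numbers and raises an extra warning when such content has no label applied.

```python
import re
from typing import List, Optional

# Hypothetical pre-send check; not part of any Microsoft API.
# Candidate card numbers: 13-19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> List[str]:
    """Return digit strings in `text` that look like payment card numbers."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


def review_draft(text: str, sensitivity_label: Optional[str]) -> List[str]:
    """Return warnings for an AI-generated draft before it is sent."""
    warnings = []
    cards = find_card_numbers(text)
    if cards:
        warnings.append(f"possible card numbers found: {len(cards)}")
        # Sensitive content with no label mirrors the missing-DLP-label risk.
        if sensitivity_label is None:
            warnings.append("sensitive content but no sensitivity label applied")
    return warnings


draft = "Hi Dana, the customer's card 4111 1111 1111 1111 was charged twice."
for warning in review_draft(draft, sensitivity_label=None):
    print("WARNING:", warning)
```

A real DLP deployment would rely on the organization's configured policies and classifiers rather than a single pattern; the Luhn check here only reduces, and does not eliminate, false positives from ordinary long numbers such as order IDs.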

By implementing these recommendations, CISOs and CIOs can help their organizations maximize the potential of Microsoft Copilot while safeguarding data, reputation, and client relationships. Vigilance and proactive measures are key to managing the complexities of AI-powered corporate communication effectively.

Learn how Perception Point’s Advanced Browser Security Extension can help protect your organization.