GenAI: The New Frontier of Cyber Security

In today’s increasingly volatile threat landscape, the challenges cyber defenders face are constantly evolving. We now stand at the brink of a new frontier, one in which revolutionary AI technologies are harnessed to create new malware and highly convincing, strategically crafted social engineering ploys. This frontier is defined by Generative AI (GenAI), which threat actors are exploiting to design and launch sophisticated, highly targeted attacks against organizations worldwide.

Understanding Generative AI and Its Capabilities

Before we delve into the risks, let’s first understand what Generative AI is. GenAI refers to a family of machine learning technologies capable of producing high-quality output that has traditionally required a human touch. Whether it’s writing captivating articles, crafting compelling stories, or even creating sophisticated deepfake videos, democratized GenAI has it all covered.

Large Language Models (LLMs) like OpenAI’s ChatGPT, Google’s Bard, and others can now generate content that is almost indistinguishable from human-written text. While this is a fascinating achievement in artificial intelligence, it poses a significant threat in the wrong hands.

The Abuse of GenAI in BEC Attacks

Imagine receiving an email that looks like it’s from your boss, or from a trusted vendor you’ve worked with for years, or even your bank. It’s personalized to you, contextually relevant, and grammatically perfect. It’s convincing. Except it’s not exactly real. It’s a product of GenAI, carefully crafted by adversaries to manipulate emotions, build false trust, and ultimately, to “social engineer” the recipient of the email into falling for a scam, clicking a link, downloading a file, or even transferring money.

Verizon’s 2023 Data Breach Investigations Report (DBIR) shows that social engineering attacks have become increasingly prevalent. Business Email Compromise (BEC) attacks have nearly doubled and now account for over 50% of incidents involving social engineering techniques, with phishing a close second.

Impersonation, phishing, and especially BEC attacks are now being “supercharged” with the power of GenAI (particularly LLMs), allowing cybercriminals to work faster and at a much larger scale than ever before. Preparation work that used to be time-consuming, such as target research and reconnaissance, “copywriting”, and design, can now be done within minutes with well-crafted prompts. This means more potential victims and a higher likelihood of successful attacks. Perception Point’s 2023 Annual Report highlights an alarming 83% growth in BEC attack attempts in 2022.

Sample GenAI-generated BEC attack caught by Perception Point

A Critical Need for GenAI Detection Technology

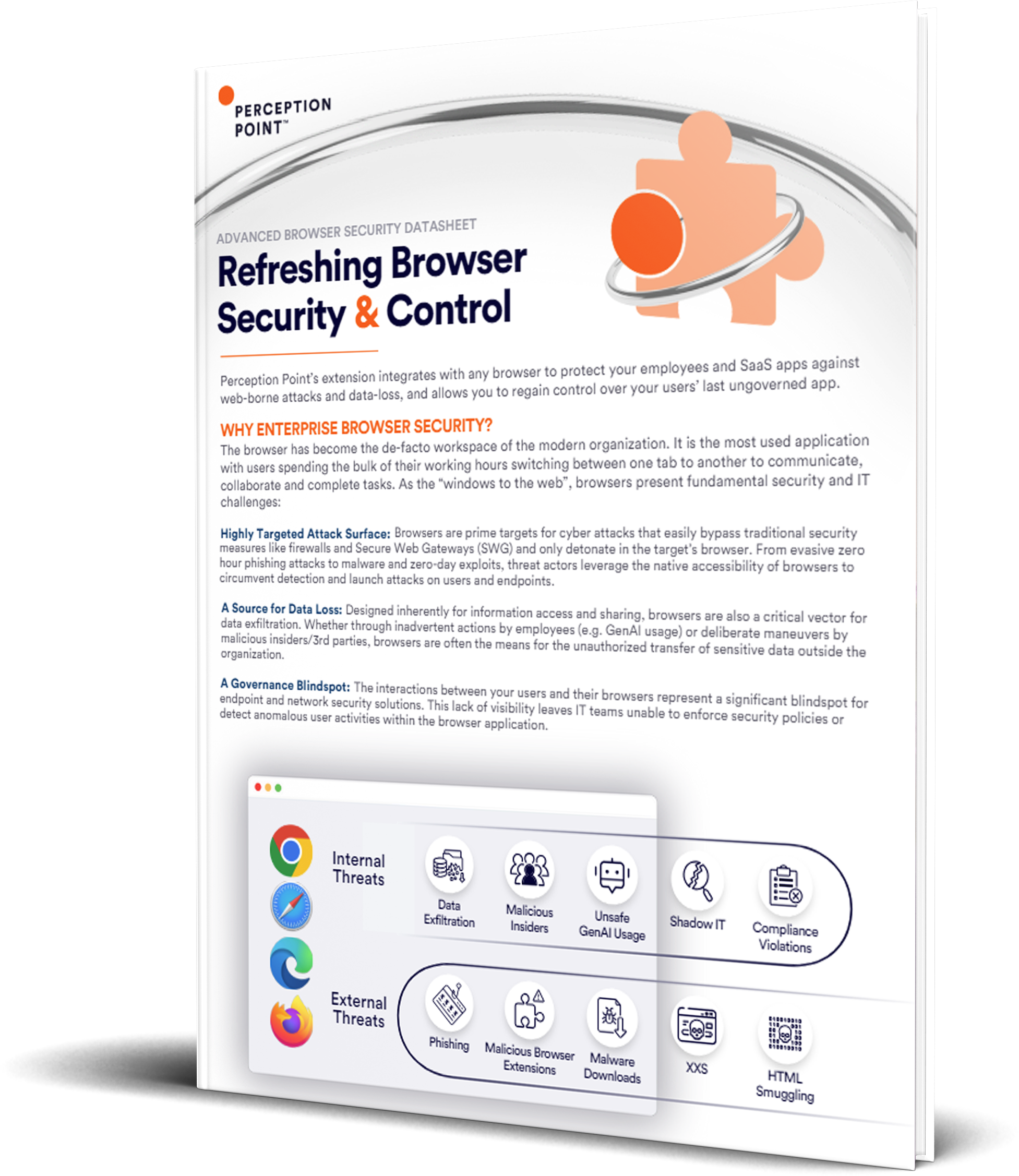

As GenAI becomes more accessible, the risks it poses grow increasingly significant. Traditional email security solutions, designed to detect typical indicators of phishing or other malicious emails, are struggling to keep up because they were not built to handle the sophistication and subtleties of GenAI-generated content. This highlights the need for a new breed of detection capabilities designed specifically to counter GenAI attacks.

Contextual and behavioral detection methods have proven effective against traditional BEC and phishing attacks, but they are less effective against GenAI-powered ones. GenAI can help craft personalized, contextually apt, grammatically sound emails that mimic legitimate communication and bypass the usual patterns these detection methods rely on. Moreover, given enough data, for example through “thread hijacking”, tools like ChatGPT can easily simulate the impersonated user’s behavior and writing style, further complicating detection.

Recognizing this growing threat, Perception Point has developed a state-of-the-art LLM-based detection model specifically designed to identify the use of GenAI in malicious emails. This is a game-changing approach that protects organizations not only from human-written email threats, sophisticated as they may be, but also from the emerging wave of machine-generated cyber attacks.

GenAI Decoded: Perception Point’s Answer to LLM-Generated Social Engineering

Perception Point’s detection solution combats GenAI-generated social engineering and BEC threats by leveraging Transformers, AI models capable of understanding the semantic context of text. Transformers are also the architecture behind today’s GenAI LLMs (GPT-4, LLaMA, PaLM 2, etc.). The strength of this approach lies in recognizing the identifiable patterns that recur in LLM-generated text.

The model uses these patterns to identify potential threats. It groups, or “clusters”, emails with similar semantic content, which lets it compare emails within the same group and pinpoint patterns characteristic of LLM-generated content. The model was initially trained on hundreds of thousands of malicious emails and is continuously trained on novel attacks.
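The following Python sketch makes the grouping step concrete. It is not Perception Point’s model. As an assumption, it replaces the Transformer embeddings with simple bag-of-words vectors, compares them using cosine similarity, and forms clusters with a greedy threshold rule. The names `embed`, `cosine`, and `cluster` and the 0.5 threshold are hypothetical. The sketch covers only the grouping step. The within-cluster comparison the article describes would then operate on each group.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Bag-of-words term counts: a crude, hypothetical stand-in for a Transformer embedding."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def cluster(emails: list[str], threshold: float = 0.5) -> list[list[int]]:
    """Greedy single-pass clustering: each email joins the first cluster whose
    seed (first member) is at least `threshold` similar, else starts a new one."""
    vectors = [embed(e) for e in emails]
    clusters: list[list[int]] = []
    for i, vec in enumerate(vectors):
        for members in clusters:
            if cosine(vec, vectors[members[0]]) >= threshold:
                members.append(i)
                break
        else:
            clusters.append([i])
    return clusters


if __name__ == "__main__":
    emails = [
        "Please process the urgent wire transfer to the new vendor account today.",
        "Kindly process the urgent wire transfer to our new vendor account today.",
        "Our quarterly newsletter: new features, webinars and product updates.",
    ]
    print(cluster(emails))  # [[0, 1], [2]]
```

The two near-identical wire-transfer requests end up in the same group, and the newsletter gets its own group.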

How It Works

When Perception Point processes an email, the model evaluates its content and, within milliseconds, returns a probability score between 0 and 1. This score indicates both the likelihood that the email was generated by an LLM and its potential for malicious intent. If the content is determined to be malicious, the model also delivers a descriptive textual analysis explaining the potential threat.
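The sketch below shows one possible shape for this score-plus-explanation output. It makes several assumptions and does not reflect Perception Point’s actual interface. It supposes that the model emits a raw logit, which a sigmoid maps into the 0 to 1 range. It also supposes that the explanation is attached only when the score crosses a hypothetical 0.5 threshold. `Verdict`, `to_verdict`, and the `reason` argument are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Verdict:
    score: float                # probability in [0, 1]
    explanation: Optional[str]  # textual analysis, present only when flagged


def sigmoid(logit: float) -> float:
    """Map an unbounded logit to [0, 1] without overflowing math.exp."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)


def to_verdict(logit: float, reason: str, threshold: float = 0.5) -> Verdict:
    """Build a hypothetical verdict: always a score, an explanation only if flagged."""
    score = sigmoid(logit)
    return Verdict(score=score, explanation=reason if score >= threshold else None)


if __name__ == "__main__":
    print(to_verdict(2.5, "Urgent CEO request for employee W-2 data"))
```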

To illustrate, imagine that a popular LLM like ChatGPT is used to craft a well-written email impersonating a company CEO (the notorious “CEO Fraud”), a recurring theme in BEC attacks. Specifically, we ask the AI to help retrieve the W-2 data of a former employee. A W-2 is a US tax form containing sensitive personal information, which could later be used to file fraudulent tax returns at the expense of the actual taxpayer.

The output ChatGPT generated looks pretty convincing. But what happens when we run it through the new detection model?

Perception Point extracts and analyzes the email’s text, identifies the LLM patterns, and returns a threat score and content analysis, all within an average of 0.06 seconds.
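Here is a minimal Python sketch of the extract-then-score flow. It uses only the standard library. The `email` package pulls the plain-text body from a raw message, and `time.perf_counter` measures latency. The `scorer` callable is a hypothetical stand-in for the detection model. The timing it reports says nothing about the 0.06-second figure above.

```python
import email
import time
from email import policy
from typing import Callable


def extract_text(raw_email: str) -> str:
    """Return the plain-text body of a raw RFC 5322 message ('' if there is none)."""
    msg = email.message_from_string(raw_email, policy=policy.default)
    body = msg.get_body(preferencelist=("plain",))
    return body.get_content() if body is not None else ""


def timed_score(raw_email: str, scorer: Callable[[str], float]) -> tuple[float, float]:
    """Extract the text, score it, and return (score, elapsed_seconds)."""
    start = time.perf_counter()
    score = scorer(extract_text(raw_email))
    return score, time.perf_counter() - start


if __name__ == "__main__":
    raw = (
        "From: ceo@example.com\n"
        "To: hr@example.com\n"
        "Subject: Quick favor\n"
        "\n"
        "Please send me the W-2 forms for our former employee today.\n"
    )

    def toy_scorer(text: str) -> float:
        # Hypothetical stand-in for the detection model.
        return 0.9 if "W-2" in text else 0.1

    score, elapsed = timed_score(raw, toy_scorer)
    print(f"score={score} elapsed={elapsed:.6f}s")
```

You can pass any function that maps text to a score as `scorer`, so the sketch does not depend on the model behind it.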

Maximal GenAI Detection = Minimal False Positives

When it comes to detecting AI-generated malicious emails, false positives pose an additional obstacle. Many legitimate emails today are written with the help of generative AI tools like ChatGPT. Other emails are built from standard templates with recurring phrases (newsletters, marketing emails, spam, etc.) that closely resemble LLM output.
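This trade-off can be quantified with two rates. The false positive rate (FPR) is the share of legitimate emails that get flagged, and the true positive rate (TPR) is the share of malicious emails that get caught. The sketch below computes both rates for a single score threshold. The scores and labels in the example are invented for illustration. In that example, a legitimate email drafted with an AI assistant (score 0.85) gets flagged unless the threshold is raised.

```python
def detection_rates(
    scores: list[float], labels: list[int], threshold: float
) -> tuple[float, float]:
    """Return (true_positive_rate, false_positive_rate) at a decision threshold.

    labels: 1 = malicious, 0 = legitimate. An email is flagged when score >= threshold.
    """
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    positives = sum(labels)
    negatives = len(labels) - positives
    tpr = tp / positives if positives else 0.0
    fpr = fp / negatives if negatives else 0.0
    return tpr, fpr


if __name__ == "__main__":
    # Illustrative data only: the 0.85 email is a legitimate, AI-assisted message.
    scores = [0.95, 0.90, 0.85, 0.30]
    labels = [1, 1, 0, 0]
    print(detection_rates(scores, labels, 0.80))  # (1.0, 0.5)
    print(detection_rates(scores, labels, 0.88))  # (1.0, 0.0)
```

Raising the threshold helps only while legitimate and malicious scores stay apart. When AI-assisted legitimate mail scores as high as real attacks, the score alone cannot separate them, and additional signals are needed.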

Perception Point’s model uses a unique three-phase architecture designed to identify harmful LLM-produced content while keeping false positive rates to a minimum. In the first phase, as described above, the model assigns a score representing the probability that the content is AI-generated. In the second phase, it categorizes the content using advanced Transformers and a refined clustering algorithm. Categories include BEC, spam, and phishing, each assigned its own probability score.
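In the second phase, the classifier must turn its raw per-category outputs into probabilities, and a softmax is one common way to do this. The sketch assumes that BEC, spam, and phishing are mutually exclusive and that the classifier emits one logit per category. Both assumptions are hypothetical. The article does not say how Perception Point computes its per-category scores, and independent per-category sigmoids would be an equally plausible choice.

```python
import math

# Categories named in the article; the real model may use more.
CATEGORIES = ("BEC", "spam", "phishing")


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: shift by the max so math.exp cannot overflow."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorize(logits: list[float]) -> dict[str, float]:
    """Map one hypothetical logit per category to a {category: probability} dict."""
    if len(logits) != len(CATEGORIES):
        raise ValueError(f"expected {len(CATEGORIES)} logits, got {len(logits)}")
    return dict(zip(CATEGORIES, softmax(logits)))


if __name__ == "__main__":
    probs = categorize([2.0, 0.5, 1.0])
    print(max(probs, key=probs.get))  # BEC
```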

In the final phase, the model combines the insights from the previous phases with additional numeric data, such as sender reputation and email authentication results (SPF, DKIM, DMARC). Based on these factors, it predicts whether the content is AI-generated and whether it is malicious, spam, or clean.
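The final phase can be sketched as a logistic combination of the earlier outputs with sender signals. The sketch parses SPF, DKIM, and DMARC results from an `Authentication-Results` header value (RFC 8601). The parsing is deliberately simplified and reads only the first result for each method. Everything else is hypothetical:

- the feature set
- the weights and bias
- the assumption that sender reputation is a number in [0, 1]
- the function names

The sketch also collapses the article’s outputs (AI-generated or not; malicious, spam, or clean) into a single malicious probability.

```python
import math
import re


def auth_features(auth_results: str) -> dict[str, float]:
    """Simplified parse of an Authentication-Results header value (RFC 8601):
    1.0 when the first spf/dkim/dmarc result is 'pass', 0.0 otherwise (incl. missing)."""
    features = {}
    for method in ("spf", "dkim", "dmarc"):
        match = re.search(rf"\b{method}=(\w+)", auth_results, re.IGNORECASE)
        features[method] = 1.0 if match and match.group(1).lower() == "pass" else 0.0
    return features


# Hypothetical weights; a real model would learn these from labelled data.
WEIGHTS = {"ai_prob": 2.0, "bec_prob": 3.0, "sender_reputation": -2.5,
           "spf": -0.5, "dkim": -0.5, "dmarc": -1.0}
BIAS = -1.0


def malicious_probability(ai_prob: float, bec_prob: float,
                          sender_reputation: float, auth_results: str) -> float:
    """Logistic combination of earlier phase outputs with sender signals."""
    x = {"ai_prob": ai_prob, "bec_prob": bec_prob,
         "sender_reputation": sender_reputation, **auth_features(auth_results)}
    z = BIAS + sum(WEIGHTS[name] * value for name, value in x.items())
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    spoofed = "mx.example.com; spf=fail smtp.mailfrom=evil.test; dkim=none; dmarc=fail"
    trusted = ("mx.example.com; spf=pass smtp.mailfrom=news.example.com; "
               "dkim=pass header.d=example.com; dmarc=pass")
    # AI-written CEO-fraud email from an unknown, unauthenticated sender:
    print(round(malicious_probability(0.95, 0.90, 0.10, spoofed), 2))  # 0.97
    # AI-assisted newsletter from a reputable, fully authenticated sender:
    print(round(malicious_probability(0.95, 0.05, 0.95, trusted), 2))  # 0.03
```

Both emails score 0.95 on AI-likelihood, yet the sender and authentication signals separate them.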

Perception Point – AI-Powered Cybersecurity

Adding Perception Point’s AI technology to our multi-layered detection platform provides a formidable line of defense against GenAI-generated email threats. Perception Point combines detection of patterns in LLM-generated content with advanced image recognition, anti-evasion algorithms, and patented dynamic engines. Together, these proactively neutralize evolving threats and stop them from ever reaching end users’ inboxes, strengthening the overall security of organizations globally.

Read “Fighting Fire with Fire: Combatting LLM-Generated Social Engineering Attacks With LLMs” to learn more about Perception Point’s innovative approach to combatting GenAI cyber attacks.